Exam Details

Exam Code: DATABRICKS-MACHINE-LEARNING-ASSOCIATE

Exam Name: Databricks Certified Machine Learning Associate

Certification: Databricks Certifications

Vendor: Databricks

Total Questions: 74 Q&As

Last Updated: Jun 25, 2025

Databricks Databricks Certifications DATABRICKS-MACHINE-LEARNING-ASSOCIATE Questions & Answers

-

Question 61:

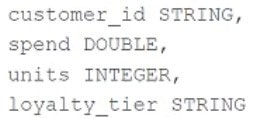

A data scientist is working with a feature set with the following schema:

Thecustomer_idcolumn is the primary key in the feature set. Each of the columns in the feature set has missing values. They want to replace the missing values by imputing a common value for each feature.

Which of the following lists all of the columns in the feature set that need to be imputed using the most common value of the column?

A. customer_id, loyalty_tier

B. loyalty_tier

C. units

D. spend

E. customer_id
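
Imputing with the most common value (the mode) is usually reserved for categorical features, while numeric features are more often filled with a mean or median. A minimal plain-Python sketch of mode imputation follows. The column name loyalty_tier comes from the answer options; the sample values and the helper `impute_mode` are hypothetical, and because the schema image is not reproduced here, treating loyalty_tier as categorical is an assumption.

```python
from collections import Counter


def impute_mode(values):
    """Replace None entries with the most frequent non-missing value."""
    observed = [v for v in values if v is not None]
    mode = Counter(observed).most_common(1)[0][0]
    return [mode if v is None else v for v in values]


# Hypothetical categorical column; the values are made up for illustration.
loyalty_tier = ["gold", None, "silver", "gold", None]
imputed_tier = impute_mode(loyalty_tier)
```

The same helper would make little sense for a primary key such as customer_id, where every value is expected to be unique and no single value is "most common".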

-

Question 62:

A data scientist has replaced missing values in their feature set with each respective feature variable's median value. A colleague suggests that the data scientist is throwing away valuable information by doing this.

Which of the following approaches can they take to include as much information as possible in the feature set?

A. Impute the missing values using each respective feature variable's mean value instead of the median value

B. Refrain from imputing the missing values in favor of letting the machine learning algorithm determine how to handle them

C. Remove all feature variables that originally contained missing values from the feature set

D. Create a binary feature variable for each feature that contained missing values indicating whether each row's value has been imputed

E. Create a constant feature variable for each feature that contained missing values indicating the percentage of rows from the feature that was originally missing
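
One way to keep the information that a value was originally missing is to store a binary flag next to the imputed column. The sketch below shows the idea in plain Python; the helper `impute_with_indicator` and the sample spend values are hypothetical and not taken from the question.

```python
from statistics import median


def impute_with_indicator(values):
    """Return (imputed_values, was_imputed_flags) using the median of observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    imputed = [fill if v is None else v for v in values]
    # 1 marks a row whose value was filled in, 0 marks an original value.
    flags = [1 if v is None else 0 for v in values]
    return imputed, flags


# Hypothetical numeric column with two missing entries.
spend = [10.0, None, 30.0, 20.0, None]
spend_imputed, spend_was_imputed = impute_with_indicator(spend)
```

A downstream model can then use the flag column to learn whether missingness itself carries signal.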

-

Question 63:

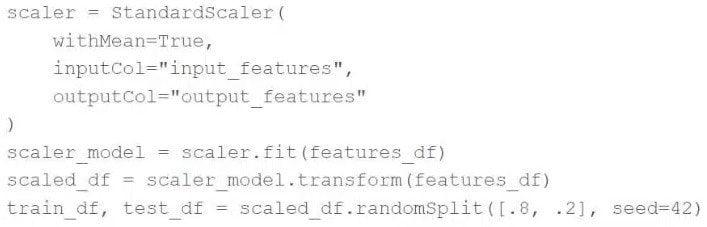

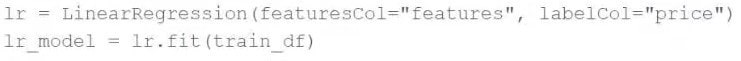

A data scientist is using Spark ML to engineer features for an exploratory machine learning project.

They decide they want to standardize their features using the following code block:

Upon code review, a colleague expressed concern with the features being standardized prior to splitting the data into a training set and a test set.

Which of the following changes can the data scientist make to address the concern?

A. Utilize the MinMaxScaler object to standardize the training data according to global minimum and maximum values

B. Utilize the MinMaxScaler object to standardize the test data according to global minimum and maximum values

C. Utilize a cross-validation process rather than a train-test split process to remove the need for standardizing data

D. Utilize the Pipeline API to standardize the training data according to the test data's summary statistics

E. Utilize the Pipeline API to standardize the test data according to the training data's summary statistics
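
The colleague's concern is about leakage: if summary statistics are computed on the full dataset, information from the test rows influences the training features. The plain-Python sketch below illustrates the usual remedy, learning the mean and standard deviation from the training data only and reusing them on the test data. The helpers `fit_scaler` and `apply_scaler` and the sample values are hypothetical; in Spark ML the same pattern is typically expressed by fitting a Pipeline on the training DataFrame and calling transform on the test DataFrame.

```python
from statistics import mean, stdev


def fit_scaler(train_values):
    """Learn summary statistics from the training data only."""
    return mean(train_values), stdev(train_values)


def apply_scaler(values, mu, sigma):
    """Standardize values with previously learned statistics."""
    return [(v - mu) / sigma for v in values]


# Hypothetical feature values, already split into train and test.
train = [1.0, 2.0, 3.0, 4.0, 5.0]
test = [6.0, 7.0]

mu, sigma = fit_scaler(train)                # statistics come from train only
train_scaled = apply_scaler(train, mu, sigma)
test_scaled = apply_scaler(test, mu, sigma)  # test reuses the train statistics
```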

-

Question 64:

Which of the following describes the relationship between native Spark DataFrames and pandas API on Spark DataFrames?

A. pandas API on Spark DataFrames are single-node versions of Spark DataFrames with additional metadata

B. pandas API on Spark DataFrames are more performant than Spark DataFrames

C. pandas API on Spark DataFrames are made up of Spark DataFrames and additional metadata

D. pandas API on Spark DataFrames are less mutable versions of Spark DataFrames

-

Question 65:

A data scientist has developed a random forest regressor rfr and included it as the final stage in a Spark ML Pipeline pipeline. They then set up a cross-validation process with pipeline as the estimator in the following code block:

Which of the following is a negative consequence of including pipeline as the estimator in the cross-validation process rather than rfr as the estimator?

A. The process will have a longer runtime because all stages of pipeline need to be refit or retransformed with each model

B. The process will leak data from the training set to the test set during the evaluation phase

C. The process will be unable to parallelize tuning due to the distributed nature of pipeline

D. The process will leak data prep information from the validation sets to the training sets for each model

-

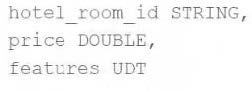

Question 66:

A machine learning engineer would like to develop a linear regression model with Spark ML to predict the price of a hotel room. They are using the Spark DataFrame train_df to train the model.

The Spark DataFrame train_df has the following schema:

The machine learning engineer shares the following code block:

Which of the following changes does the machine learning engineer need to make to complete the task?

A. They need to call the transform method on train_df

B. They need to convert the features column to be a vector

C. They do not need to make any changes

D. They need to utilize a Pipeline to fit the model

E. They need to split the features column out into one column for each feature

-

Question 67:

Which of the following Spark operations can be used to randomly split a Spark DataFrame into a training DataFrame and a test DataFrame for downstream use?

A. TrainValidationSplit

B. DataFrame.where

C. CrossValidator

D. TrainValidationSplitModel

E. DataFrame.randomSplit
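
In PySpark, a random split is usually written as `train_df, test_df = df.randomSplit([0.8, 0.2], seed=42)`. To show what a weighted random split does conceptually, here is a plain-Python analogue. It is a sketch, not Spark's implementation: the helper `random_split` and the sample rows are hypothetical, and the per-row sampling approach is an assumption about the general technique, which is why the resulting sizes are only approximately 80/20.

```python
import random


def random_split(rows, weights, seed):
    """Assign each row to exactly one split by drawing a uniform number per row."""
    total = sum(weights)
    # Cumulative boundaries in (0, 1], e.g. [0.8, 1.0] for weights [0.8, 0.2].
    bounds = []
    running = 0.0
    for w in weights:
        running += w / total
        bounds.append(running)

    rng = random.Random(seed)
    splits = [[] for _ in weights]
    for row in rows:
        u = rng.random()
        for i, bound in enumerate(bounds):
            # The last split catches any floating-point remainder.
            if u < bound or i == len(bounds) - 1:
                splits[i].append(row)
                break
    return splits


rows = list(range(1000))  # hypothetical stand-in for DataFrame rows
train_rows, test_rows = random_split(rows, [0.8, 0.2], seed=42)
```

Fixing the seed makes the split reproducible, which matters when results need to be compared across runs.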

-

Question 68:

A data scientist has written a data cleaning notebook that utilizes the pandas library, but their colleague has suggested that they refactor their notebook to scale with big data.

Which of the following approaches can the data scientist take to spend the least amount of time refactoring their notebook to scale with big data?

A. They can refactor their notebook to process the data in parallel.

B. They can refactor their notebook to use the PySpark DataFrame API.

C. They can refactor their notebook to use the Scala Dataset API.

D. They can refactor their notebook to use Spark SQL.

E. They can refactor their notebook to utilize the pandas API on Spark.

-

Question 69:

The implementation of linear regression in Spark ML first attempts to solve the linear regression problem using matrix decomposition, but this method does not scale well to large datasets with a large number of variables.

Which of the following approaches does Spark ML use to distribute the training of a linear regression model for large data?

A. Logistic regression

B. Spark ML cannot distribute linear regression training

C. Iterative optimization

D. Least-squares method

E. Singular value decomposition
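
Iterative optimization replaces a one-shot matrix solve with repeated small updates, and each update only needs sums over the data that can be computed per partition and combined. The sketch below uses plain batch gradient descent on a single feature to show the idea. It is not Spark ML's solver, which is more sophisticated; the helper `fit_linear_gd`, the learning rate, the step count, and the sample data are all assumptions made for illustration.

```python
def fit_linear_gd(xs, ys, lr=0.05, steps=5000):
    """Fit y = w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        # Each is a sum over rows, so it could be computed in parallel and added up.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_linear_gd(xs, ys)
```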

-

Question 70:

A data scientist has created two linear regression models. The first model uses price as a label variable and the second model uses log(price) as a label variable. When evaluating the RMSE of each model by comparing the label predictions to the actual price values, the data scientist notices that the RMSE for the second model is much larger than the RMSE of the first model.

Which of the following possible explanations for this difference is invalid?

A. The second model is much more accurate than the first model

B. The data scientist failed to exponentiate the predictions in the second model prior to computing the RMSE

C. The data scientist failed to take the log of the predictions in the first model prior to computing the RMSE

D. The first model is much more accurate than the second model

E. The RMSE is an invalid evaluation metric for regression problems
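
The scale mismatch described in this question is easy to reproduce. The plain-Python sketch below compares predictions from a model trained on log(price) against actual prices, first without and then with exponentiating the predictions. The helper `rmse`, the prices, and the predictions are hypothetical values chosen for illustration.

```python
import math


def rmse(predictions, actuals):
    """Root mean squared error between two equal-length sequences."""
    squared = [(p - a) ** 2 for p, a in zip(predictions, actuals)]
    return math.sqrt(sum(squared) / len(actuals))


price = [100.0, 200.0, 400.0]
# Hypothetical predictions from a model whose label was log(price).
log_predictions = [math.log(110.0), math.log(190.0), math.log(420.0)]

# Comparing log-scale predictions to price-scale actuals inflates the error.
rmse_wrong_scale = rmse(log_predictions, price)

# Exponentiating first puts predictions and actuals on the same scale.
rmse_price_scale = rmse([math.exp(p) for p in log_predictions], price)
```

Here the model's predictions are reasonably close to the true prices, yet the first RMSE is far larger than the second purely because of the scale mismatch.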

Related Exams:

DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK: Databricks Certified Associate Developer for Apache Spark 3.0

DATABRICKS-CERTIFIED-DATA-ANALYST-ASSOCIATE: Databricks Certified Data Analyst Associate

DATABRICKS-CERTIFIED-DATA-ENGINEER-ASSOCIATE: Databricks Certified Data Engineer Associate

DATABRICKS-CERTIFIED-GENERATIVE-AI-ENGINEER-ASSOCIATE: Databricks Certified Generative AI Engineer Associate

DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER: Databricks Certified Data Engineer Professional

DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-SCIENTIST: Databricks Certified Professional Data Scientist

DATABRICKS-MACHINE-LEARNING-ASSOCIATE: Databricks Certified Machine Learning Associate

DATABRICKS-MACHINE-LEARNING-PROFESSIONAL: Databricks Certified Machine Learning Professional
