Exam Details

Exam Code: DATABRICKS-MACHINE-LEARNING-ASSOCIATE

Exam Name: Databricks Certified Machine Learning Associate

Certification: Databricks Certifications

Vendor: Databricks

Total Questions: 74 Q&As

Last Updated: Jun 25, 2025

DATABRICKS-MACHINE-LEARNING-ASSOCIATE Questions & Answers

-

Question 11:

A machine learning engineer is trying to perform batch model inference. They want to get predictions using the linear regression model saved at the path model_uri for the DataFrame batch_df.

batch_df has the following schema:

customer_id STRING

The machine learning engineer runs the following code block to perform inference on batch_df using the linear regression model at model_uri:

In which situation will the machine learning engineer's code block perform the desired inference?

A. When the Feature Store feature set was logged with the model at model_uri

B. When all of the features used by the model at model_uri are in a Spark DataFrame in the PySpark

C. When the model at model_uri only uses customer_id as a feature

D. This code block will not perform the desired inference in any situation.

E. When all of the features used by the model at model_uri are in a single Feature Store table

-

Question 12:

An organization is developing a feature repository and is electing to one-hot encode all categorical feature variables. A data scientist suggests that the categorical feature variables should not be one-hot encoded within the feature repository.

Which of the following explanations justifies this suggestion?

A. One-hot encoding is not supported by most machine learning libraries.

B. One-hot encoding is dependent on the target variable's values which differ for each application.

C. One-hot encoding is computationally intensive and should only be performed on small samples of training sets for individual machine learning problems.

D. One-hot encoding is not a common strategy for representing categorical feature variables numerically.

E. One-hot encoding is a potentially problematic categorical variable strategy for some machine learning algorithms.
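One concrete way one-hot encoding can cause trouble for some algorithms: the indicator columns always sum to 1 in every row, so together with an intercept term they are perfectly collinear, which matters for unregularized linear models. The sketch below illustrates this in plain Python. The `one_hot` helper and the `colors` sample are hypothetical and are not part of the question.

```python
def one_hot(values):
    """Return (categories, rows), where each row is a list of 0/1 indicators."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows

# Hypothetical categorical feature, used only for illustration.
colors = ["red", "green", "blue", "green"]
categories, encoded = one_hot(colors)

# Every row sums to exactly 1, so the indicator columns add up to a
# constant column, which is the same column an intercept term contributes.
row_sums = [sum(row) for row in encoded]
```

Keeping the raw categorical values in the feature repository leaves each downstream model free to choose the encoding that suits its algorithm.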

-

Question 13:

A data scientist has developed a linear regression model using Spark ML and computed the predictions in a Spark DataFrame preds_df with the following schema:

prediction DOUBLE

actual DOUBLE

Which of the following code blocks can be used to compute the root mean-squared-error of the model according to the data in preds_df and assign it to the rmse variable?

A. Option A

B. Option B

C. Option C

D. Option D
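For reference, RMSE is the square root of the mean of the squared differences between prediction and actual. The sketch below computes it in plain Python over hypothetical `(prediction, actual)` pairs that mirror the preds_df schema. It is not one of the answer options, and it does not use Spark. In Spark ML this metric is typically obtained from `RegressionEvaluator` with `metricName="rmse"`.

```python
import math

def rmse(rows):
    """Root mean squared error over (prediction, actual) pairs."""
    squared_errors = [(pred - actual) ** 2 for pred, actual in rows]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical rows mirroring the preds_df schema: (prediction, actual).
sample_rows = [(3.0, 1.0), (5.0, 5.0), (2.0, 4.0), (7.0, 7.0)]
rmse_value = rmse(sample_rows)
```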

-

Question 14:

A data scientist has been given an incomplete notebook from the data engineering team. The notebook uses a Spark DataFrame spark_df on which the data scientist needs to perform further feature engineering. Unfortunately, the data scientist has not yet learned the PySpark DataFrame API.

Which of the following blocks of code can the data scientist run to be able to use the pandas API on Spark?

A. import pyspark.pandas as ps
   df = ps.DataFrame(spark_df)

B. import pyspark.pandas as ps
   df = ps.to_pandas(spark_df)

C. spark_df.to_pandas()

D. import pandas as pd
   df = pd.DataFrame(spark_df)

-

Question 15:

Which of the following machine learning algorithms typically uses bagging?

A. Gradient boosted trees

B. K-means

C. Random forest

D. Linear regression

E. Decision tree
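Bagging (bootstrap aggregating) trains several base learners on bootstrap samples of the training data and averages their predictions. The sketch below shows that mechanism in plain Python with a deliberately trivial base learner that predicts the sample mean. The function names and data are hypothetical. A random forest applies the same idea to decision trees and also randomizes the features considered at each split, which this sketch does not attempt.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return [rng.choice(data) for _ in data]

def fit_mean_model(sample):
    """A deliberately trivial base learner: always predicts the sample mean."""
    mean = sum(sample) / len(sample)
    return lambda: mean

def bagged_prediction(data, n_models=25, seed=0):
    """Train n_models base learners on bootstrap samples and average them."""
    rng = random.Random(seed)
    models = [fit_mean_model(bootstrap_sample(data, rng)) for _ in range(n_models)]
    return sum(model() for model in models) / n_models

# Hypothetical target values, used only for illustration.
targets = [2.0, 4.0, 6.0, 8.0, 10.0]
prediction = bagged_prediction(targets)
```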

-

Question 16:

A machine learning engineer is trying to scale a machine learning pipeline by distributing its single-node model tuning process. After broadcasting the entire training data onto each core, each core in the cluster can train one model at a time. Because the tuning process is still running slowly, the engineer wants to increase the level of parallelism from 4 cores to 8 cores to speed up the tuning process. Unfortunately, the total memory in the cluster cannot be increased.

In which of the following scenarios will increasing the level of parallelism from 4 to 8 speed up the tuning process?

A. When the tuning process is randomized

B. When the entire data can fit on each core

C. When the model is unable to be parallelized

D. When the data is particularly long in shape

E. When the data is particularly wide in shape
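The constraint in this scenario is arithmetic: with total memory fixed, doubling the number of cores halves the memory available to each core, and each core still needs a full copy of the training data. The sketch below works through that check. It assumes memory is split evenly across cores and ignores all overhead. The 64 GB figure and the data sizes are hypothetical.

```python
def memory_per_core_gb(total_memory_gb, n_cores):
    """Evenly divide a fixed memory budget across cores (simplifying assumption)."""
    return total_memory_gb / n_cores

def data_fits_on_each_core(data_size_gb, total_memory_gb, n_cores):
    """True if a full copy of the data fits in one core's share of memory."""
    return data_size_gb <= memory_per_core_gb(total_memory_gb, n_cores)

TOTAL_MEMORY_GB = 64  # hypothetical cluster memory, fixed

# At 8 cores each core gets 8 GB: a 6 GB dataset fits, a 12 GB one does not.
fits_small = data_fits_on_each_core(6, TOTAL_MEMORY_GB, 8)
fits_large = data_fits_on_each_core(12, TOTAL_MEMORY_GB, 8)
```

Under these assumptions, the 12 GB dataset fits at 4 cores (16 GB each) but no longer fits at 8, so the extra parallelism would not help in that case.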

-

Question 17:

A data scientist has written a feature engineering notebook that utilizes the pandas library. As the size of the data processed by the notebook increases, the notebook's runtime increases drastically and processing becomes slow.

Which of the following tools can the data scientist use to spend the least amount of time refactoring their notebook to scale with big data?

A. PySpark DataFrame API

B. pandas API on Spark

C. Spark SQL

D. Feature Store

-

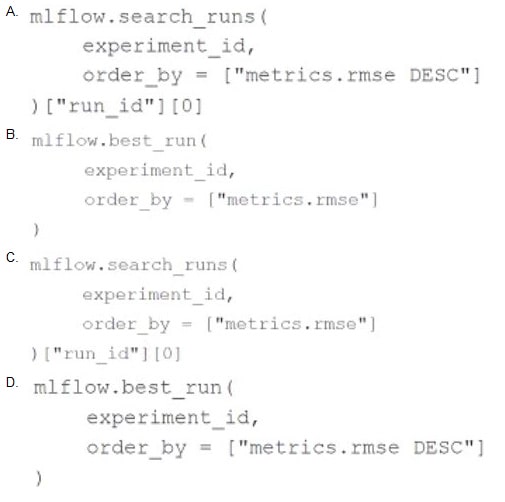

Question 18:

A data scientist is utilizing MLflow Autologging to automatically track their machine learning experiments. After completing a series of runs for the experiment experiment_id, the data scientist wants to identify the run_id of the run with the best root-mean-square error (RMSE).

Which of the following lines of code can be used to identify the run_id of the run with the best RMSE in experiment_id?

A. Option A

B. Option B

C. Option C

D. Option D
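Because RMSE is an error metric, the "best" run is the one with the lowest value, so the runs need to be ordered by RMSE ascending. The sketch below shows that selection logic in plain Python over hypothetical run records. It does not call MLflow and is not one of the answer options. With MLflow, run records are usually retrieved with `mlflow.search_runs`, which accepts an `order_by` argument.

```python
# Hypothetical run records; in MLflow these would come from the tracking server.
runs = [
    {"run_id": "run_a", "rmse": 4.2},
    {"run_id": "run_b", "rmse": 3.1},
    {"run_id": "run_c", "rmse": 5.0},
]

# Sort ascending by RMSE and take the first record: the lowest error wins.
best_run = sorted(runs, key=lambda run: run["rmse"])[0]
best_run_id = best_run["run_id"]
```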

-

Question 19:

A machine learning engineering team has a Job with three successive tasks. Each task runs a single notebook. The team has been alerted that the Job has failed in its latest run.

Which of the following approaches can the team use to identify which task is the cause of the failure?

A. Run each notebook interactively

B. Review the matrix view in the Job's runs

C. Migrate the Job to a Delta Live Tables pipeline

D. Change each Task's setting to use a dedicated cluster

-

Question 20:

The implementation of linear regression in Spark ML first attempts to solve the linear regression problem using matrix decomposition, but this method does not scale well to large datasets with a large number of variables.

Which of the following approaches does Spark ML use to distribute the training of a linear regression model for large data?

A. Logistic regression

B. Singular value decomposition

C. Iterative optimization

D. Least-squares method
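To illustrate what iterative optimization means for linear regression, the sketch below fits a one-feature model by repeatedly stepping against the gradient of the mean squared error instead of solving the problem in closed form. Plain batch gradient descent is used here as a simple stand-in. It is not claimed to be the optimizer Spark ML uses, and the function name, learning rate, and data are hypothetical.

```python
def fit_linear_regression(xs, ys, learning_rate=0.05, n_iters=2000):
    """Fit y = w * x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_iters):
        # Residuals of the current model on every row.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Hypothetical data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_linear_regression(xs, ys)
```

Each gradient is a sum over rows, so partial sums can be computed on separate data partitions and then combined, which is why this style of method lends itself to distributed training.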

Related Exams:

DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK: Databricks Certified Associate Developer for Apache Spark 3.0

DATABRICKS-CERTIFIED-DATA-ANALYST-ASSOCIATE: Databricks Certified Data Analyst Associate

DATABRICKS-CERTIFIED-DATA-ENGINEER-ASSOCIATE: Databricks Certified Data Engineer Associate

DATABRICKS-CERTIFIED-GENERATIVE-AI-ENGINEER-ASSOCIATE: Databricks Certified Generative AI Engineer Associate

DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER: Databricks Certified Data Engineer Professional

DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-SCIENTIST: Databricks Certified Professional Data Scientist

DATABRICKS-MACHINE-LEARNING-ASSOCIATE: Databricks Certified Machine Learning Associate

DATABRICKS-MACHINE-LEARNING-PROFESSIONAL: Databricks Certified Machine Learning Professional

Tips on How to Prepare for the Exams

Certification exams are increasingly important and are required by more and more enterprises when you apply for a job. But how can you prepare for the exam effectively, in a short time, and with less effort? How can you get an ideal result, and where can you find the most reliable resources? Vcedump.com answers these questions. Vcedump.com provides not only Databricks exam questions, answers, and explanations but also complete assistance with your exam preparation and certification application. If you are unsure about your DATABRICKS-MACHINE-LEARNING-ASSOCIATE exam preparation or your Databricks certification application, visit Vcedump.com to find solutions.