Exam Details

Exam Code: DATABRICKS-MACHINE-LEARNING-ASSOCIATE

Exam Name: Databricks Certified Machine Learning Associate

Certification: Databricks Certifications

Vendor: Databricks

Total Questions: 74 Q&As

Last Updated: Jun 25, 2025

Databricks Databricks Certifications DATABRICKS-MACHINE-LEARNING-ASSOCIATE Questions & Answers

-

Question 41:

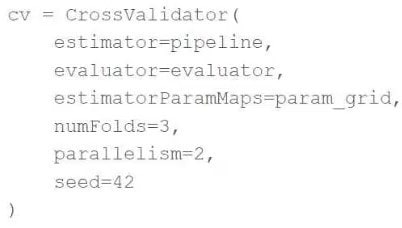

A machine learning engineer is trying to scale a machine learning pipelinepipelinethat contains multiple feature engineering stages and a modeling stage. As part of the cross-validation process, they are using the following code block:

A colleague suggests that the code block can be changed to speed up the tuning process by passing the model object to the estimator parameter and then placing the updated cv object as the final stage of the pipeline in place of the original model.

Which of the following is a negative consequence of the approach suggested by the colleague?

A. The model will take longer to train for each unique combination of hyperparameter values

B. The feature engineering stages will be computed using validation data

C. The cross-validation process will no longer be

D. The cross-validation process will no longer be reproducible

E. The model will be refit one more time per cross-validation fold
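
The difference between the two arrangements can be illustrated without Spark. The plain-Python sketch below is an analogue, not the code from the question: it assumes the original code passes the whole pipeline as the estimator, uses a mean-centering step as a stand-in for a feature engineering stage, and relies on hypothetical function names and data. It contrasts fitting the feature step inside each fold with fitting it once before any fold is split off.

```python
from statistics import mean

def k_folds(rows, k):
    """Yield (train, validation) splits; fold i takes every k-th row starting at i."""
    for i in range(k):
        validation = rows[i::k]
        train = [r for j, r in enumerate(rows) if j % k != i]
        yield train, validation

def centers_pipeline_inside_cv(rows, k):
    """Whole pipeline is the estimator: the feature step is refit on each training fold."""
    return [mean(train) for train, _ in k_folds(rows, k)]

def centers_cv_inside_pipeline(rows, k):
    """Cross-validator is the last stage: the feature step is fit once on all rows."""
    center = mean(rows)  # computed before any fold is split off
    return [center for _ in k_folds(rows, k)]

rows = [1.0, 2.0, 3.0, 4.0, 5.0, 60.0]  # hypothetical feature values
inside = centers_pipeline_inside_cv(rows, k=3)
outside = centers_cv_inside_pipeline(rows, k=3)
```

In the first arrangement the feature step runs once per fold and never sees that fold's validation rows. In the second it runs only once, which is cheaper, but the statistic it learns is identical for every fold because it was computed from rows that later serve as validation data.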

-

Question 42:

A data scientist has developed a linear regression model using Spark ML and computed the predictions in a Spark DataFrame preds_df with the following schema:

prediction DOUBLE

actual DOUBLE

Which of the following code blocks can be used to compute the root mean-squared-error of the model according to the data in preds_df and assign it to the rmse variable?

A. Option A

B. Option B

C. Option C

D. Option D

E. Option E
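
The answer options survive only as placeholders, so the following is a sketch of the quantity being asked for rather than a reconstruction of any option. It computes root mean-squared error in plain Python over hypothetical (prediction, actual) pairs that mirror the preds_df schema. In Spark ML the same metric is typically obtained from a RegressionEvaluator configured with predictionCol="prediction", labelCol="actual", and metricName="rmse".

```python
import math

def rmse(pairs):
    """Root mean-squared error over (prediction, actual) pairs."""
    squared_errors = [(prediction - actual) ** 2 for prediction, actual in pairs]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical rows standing in for preds_df: (prediction, actual)
rows = [(3.0, 5.0), (0.0, 0.0), (7.0, 5.0), (4.0, 4.0)]
value = rmse(rows)
```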

-

Question 43:

A data scientist is using Spark SQL to import their data into a machine learning pipeline. Once the data is imported, the data scientist performs machine learning tasks using Spark ML.

Which of the following compute tools is best suited for this use case?

A. Single Node cluster

B. Standard cluster

C. SQL Warehouse

D. None of these compute tools support this task

-

Question 44:

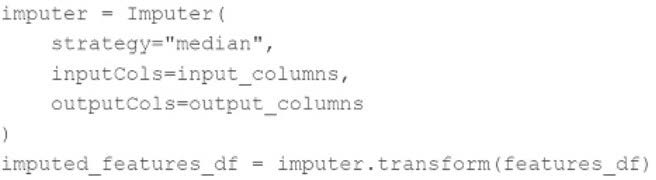

A data scientist wants to use Spark ML to impute missing values in their PySpark DataFrame features_df. They want to replace missing values in all numeric columns in features_df with each respective numeric column's median value.

They have developed the following code block to accomplish this task:

The code block is not accomplishing the task.

Which reason describes why the code block is not accomplishing the imputation task?

A. It does not impute both the training and test data sets.

B. The inputCols and outputCols need to be exactly the same.

C. The fit method needs to be called instead of transform.

D. It does not fit the imputer on the data to create an ImputerModel.
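
Spark ML separates estimators, which learn parameters from data through fit, from the models they return, which apply those parameters through transform. The sketch below mimics that pattern in plain Python. MedianImputer and MedianImputerModel are hypothetical stand-ins for Spark's Imputer and ImputerModel, not the real classes, and the rows are invented for illustration.

```python
from statistics import median

class MedianImputerModel:
    """Fitted state: one median per column, applied by transform."""
    def __init__(self, medians):
        self.medians = medians

    def transform(self, rows):
        # Replace None with the median learned for that column
        return [
            {col: (self.medians[col] if row[col] is None else row[col])
             for col in self.medians}
            for row in rows
        ]

class MedianImputer:
    """Estimator: has nothing to apply until fit has seen the data."""
    def __init__(self, columns):
        self.columns = columns

    def fit(self, rows):
        # Learn each column's median from its non-missing values
        medians = {
            col: median(row[col] for row in rows if row[col] is not None)
            for col in self.columns
        }
        return MedianImputerModel(medians)

# Hypothetical rows standing in for features_df
rows = [{"x": 1.0, "y": None}, {"x": None, "y": 4.0}, {"x": 3.0, "y": 8.0}]
model = MedianImputer(columns=["x", "y"]).fit(rows)
imputed = model.transform(rows)
```

The estimator in this sketch deliberately has no transform method: the medians exist only on the object that fit returns.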

-

Question 45:

A data scientist is performing hyperparameter tuning using an iterative optimization algorithm. Each evaluation of unique hyperparameter values is being trained on a single compute node. They are performing eight total evaluations across eight total compute nodes. While the accuracy of the model does vary over the eight evaluations, they notice there is no trend of improvement in the accuracy. The data scientist believes this is due to the parallelization of the tuning process.

Which change could the data scientist make to improve their model accuracy over the course of their tuning process?

A. Change the number of compute nodes to be half or less than half of the number of evaluations.

B. Change the number of compute nodes and the number of evaluations to be much larger but equal.

C. Change the iterative optimization algorithm used to facilitate the tuning process.

D. Change the number of compute nodes to be double or more than double the number of evaluations.
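
An iterative optimizer chooses each new set of hyperparameter values using the results of evaluations that have already finished. The sketch below uses a deliberately simplified model, assumed here for illustration only, in which evaluations run in synchronized batches whose size equals the number of compute nodes. The function names are hypothetical. It counts how many sequential rounds occur and how many evaluations start with at least one earlier result available.

```python
import math

def batches(max_evals, parallelism):
    """Number of sequential rounds in which the optimizer can update its choices."""
    return math.ceil(max_evals / parallelism)

def informed_evaluations(max_evals, parallelism):
    """Count evaluations launched after at least one earlier batch has finished.

    Simplified model: only the first batch starts with no prior results.
    """
    first_batch = min(parallelism, max_evals)
    return max_evals - first_batch

# Eight evaluations, as in the question, under different node counts
scenarios = {p: (batches(8, p), informed_evaluations(8, p)) for p in (8, 4, 2, 1)}
```

Under this model, eight evaluations on eight nodes run as a single batch, so none of them can build on another's result. Fewer nodes trade wall-clock time for more rounds in which earlier results can guide later choices.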

-

Question 46:

A machine learning engineer has created a Feature Table new_table using Feature Store Client fs. When creating the table, they specified a metadata description with key information about the Feature Table. They now want to retrieve that metadata programmatically.

Which of the following lines of code will return the metadata description?

A. There is no way to return the metadata description programmatically.

B. fs.create_training_set("new_table")

C. fs.get_table("new_table").description

D. fs.get_table("new_table").load_df()

E. fs.get_table("new_table")

-

Question 47:

A data scientist has been given an incomplete notebook from the data engineering team. The notebook uses a Spark DataFrame spark_df on which the data scientist needs to perform further feature engineering. Unfortunately, the data scientist has not yet learned the PySpark DataFrame API.

Which of the following blocks of code can the data scientist run to be able to use the pandas API on Spark?

A. import pyspark.pandas as ps
   df = ps.DataFrame(spark_df)

B. import pyspark.pandas as ps
   df = ps.to_pandas(spark_df)

C. spark_df.to_sql()

D. import pandas as pd
   df = pd.DataFrame(spark_df)

E. spark_df.to_pandas()

-

Question 48:

Which of the following describes the relationship between native Spark DataFrames and pandas API on Spark DataFrames?

A. pandas API on Spark DataFrames are single-node versions of Spark DataFrames with additional metadata

B. pandas API on Spark DataFrames are more performant than Spark DataFrames

C. pandas API on Spark DataFrames are made up of Spark DataFrames and additional metadata

D. pandas API on Spark DataFrames are less mutable versions of Spark DataFrames

E. pandas API on Spark DataFrames are unrelated to Spark DataFrames

-

Question 49:

A data scientist learned during their training to always use 5-fold cross-validation in their model development workflow. A colleague suggests that there are cases where a train-validation split could be preferred over k-fold cross-validation when k > 2.

Which of the following describes a potential benefit of using a train-validation split over k-fold cross-validation in this scenario?

A. A holdout set is not necessary when using a train-validation split

B. Reproducibility is achievable when using a train-validation split

C. Fewer hyperparameter values need to be tested when using a train-validation split

D. Bias is avoidable when using a train-validation split

E. Fewer models need to be trained when using a train-validation split
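
The training cost of the two validation strategies can be compared by counting model fits. This is a minimal sketch, assuming one fit per hyperparameter combination per training split and ignoring any final refit on the full training set. The function names and the grid size are hypothetical.

```python
def fits_k_fold(num_combinations, k):
    """Each combination is trained once per fold."""
    return num_combinations * k

def fits_train_validation_split(num_combinations):
    """Each combination is trained once, on the single training portion."""
    return num_combinations

num_combinations = 12  # hypothetical grid size
k_fold_fits = fits_k_fold(num_combinations, k=5)
split_fits = fits_train_validation_split(num_combinations)
```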

-

Question 50:

A data scientist uses 3-fold cross-validation and the following hyperparameter grid when optimizing model hyperparameters via grid search for a classification problem:

Hyperparameter 1: [2, 5, 10]

Hyperparameter 2: [50, 100]

Which of the following represents the number of machine learning models that can be trained in parallel during this process?

A. 3

B. 5

C. 6

D. 18
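
The counts involved can be checked by enumerating the grid. The sketch below uses only the values given in the question. The variable names are illustrative, and it does not model how any particular library schedules the work.

```python
from itertools import product

grid = {
    "hyperparameter_1": [2, 5, 10],
    "hyperparameter_2": [50, 100],
}
num_folds = 3

# Every combination of one value per hyperparameter
combinations = list(product(*grid.values()))

# Each combination is fit once per fold; no fit depends on another fit's result
fits = [(combo, fold) for combo in combinations for fold in range(num_folds)]

num_combinations = len(combinations)
num_fits = len(fits)
```

The grid yields 6 combinations and, across 3 folds, 18 individual fits.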

