Exam Details

Exam Code: DATABRICKS-MACHINE-LEARNING-ASSOCIATE

Exam Name: Databricks Certified Machine Learning Associate

Certification: Databricks Certifications

Vendor: Databricks

Total Questions: 74 Q&As

Last Updated: Jun 25, 2025

Databricks Databricks Certifications DATABRICKS-MACHINE-LEARNING-ASSOCIATE Questions & Answers

-

Question 31:

A data scientist wants to parallelize the training of trees in a gradient boosted tree to speed up the training process. A colleague suggests that parallelizing a boosted tree algorithm can be difficult.

Which of the following describes why?

A. Gradient boosting is not a linear algebra-based algorithm which is required for parallelization

B. Gradient boosting requires access to all data at once which cannot happen during parallelization.

C. Gradient boosting calculates gradients in evaluation metrics using all cores which prevents parallelization.

D. Gradient boosting is an iterative algorithm that requires information from the previous iteration to perform the next step.
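For readers who want to see the training loop the question refers to, here is a minimal, dependency-free sketch of gradient boosting for squared error. It is an illustration under stated assumptions, not how any particular library implements it: `fit_stump`, `boost`, the toy data, and the learning rate are all hypothetical names and values chosen for this example.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression stump to the residuals (least squares)."""
    best = None
    for threshold in sorted(set(xs)):
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right) if right else 0.0
        error = sum(
            (r - (left_mean if x <= threshold else right_mean)) ** 2
            for x, r in zip(xs, residuals)
        )
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Train stumps one after another on the current residuals."""
    predictions = [0.0] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # The residuals are computed from the predictions accumulated so far,
        # so this round cannot start before the previous round has finished.
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [
            p + learning_rate * stump(x) for p, x in zip(predictions, xs)
        ]
    return stumps, predictions


# Toy data, made up for illustration only.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.0, 1.2, 0.9, 3.1, 2.9, 3.0]
stumps, predictions = boost(xs, ys)
mse = sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys)
```

Note how the residuals inside the loop are derived from the predictions of all earlier rounds; the work inside a single round (for example, scanning candidate thresholds) is the part that could be split across workers.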

-

Question 32:

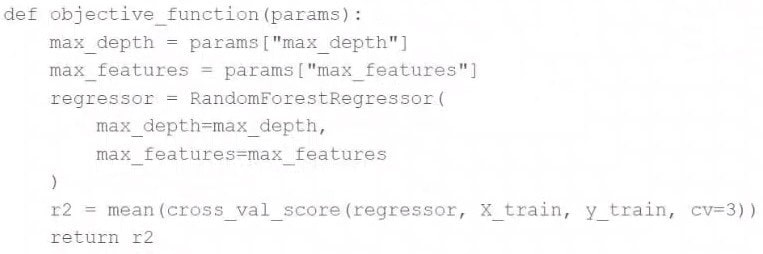

A data scientist wants to efficiently tune the hyperparameters of a scikit-learn model. They elect to use the Hyperopt library'sfminoperation to facilitate this process. Unfortunately, the final model is not very accurate. The data scientist suspects that there is an issue with theobjective_functionbeing passed as an argument tofmin.

They use the following code block to create theobjective_function:

Which of the following changes does the data scientist need to make to theirobjective_functionin order to produce a more accurate model?

A. Add test set validation process

B. Add a random_state argument to the RandomForestRegressor operation

C. Remove the mean operation that is wrapping the cross_val_score operation

D. Replace the r2 return value with -r2

E. Replace the fmin operation with the fmax operation
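Hyperopt's fmin minimizes the value returned by the objective function. The sketch below illustrates what that means for an objective that returns a "higher is better" score. It deliberately avoids Hyperopt and scikit-learn so it runs anywhere: `toy_fmin` is a hypothetical stand-in for a minimizer (not Hyperopt's API), and `score` is a made-up function standing in for a cross-validated metric.

```python
def toy_fmin(fn, candidates):
    """Hypothetical stand-in for a minimizer: pick the candidate with the lowest fn value."""
    return min(candidates, key=fn)


def score(max_depth):
    """Made-up validation score (higher is better), peaking at max_depth == 6."""
    return 1.0 - 0.01 * (max_depth - 6) ** 2


def objective_returning_score(max_depth):
    # Returning the raw score asks the minimizer to find the LOWEST score.
    return score(max_depth)


def objective_returning_loss(max_depth):
    # Negating the score turns "maximize the score" into a minimization problem.
    return -score(max_depth)


candidates = range(1, 11)
picked_with_score = toy_fmin(objective_returning_score, candidates)
picked_with_loss = toy_fmin(objective_returning_loss, candidates)
```

With the raw score the minimizer settles on the worst-scoring candidate in the range, while the negated score leads it to the candidate with the highest score.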

-

Question 33:

A data scientist wants to tune a set of hyperparameters for a machine learning model. They have wrapped a Spark ML model in the objective function objective_function and they have defined the search space search_space.

As a result, they have the following code block:

Which of the following changes do they need to make to the above code block in order to accomplish the task?

A. Change SparkTrials() to Trials()

B. Reduce num_evals to be less than 10

C. Change fmin() to fmax()

D. Remove the trials=trials argument

E. Remove the algo=tpe.suggest argument

-

Question 34:

A data scientist wants to explore the Spark DataFrame spark_df. The data scientist wants visual histograms displaying the distribution of numeric features to be included in the exploration.

Which of the following lines of code can the data scientist run to accomplish the task?

A. spark_df.describe()

B. dbutils.data(spark_df).summarize()

C. This task cannot be accomplished in a single line of code.

D. spark_df.summary()

E. dbutils.data.summarize(spark_df)

-

Question 35:

A machine learning engineer has grown tired of needing to install the MLflow Python library on each of their clusters. They ask a senior machine learning engineer how their notebooks can load the MLflow library without installing it each time. The senior machine learning engineer suggests that they use Databricks Runtime for Machine Learning.

Which of the following approaches describes how the machine learning engineer can begin using Databricks Runtime for Machine Learning?

A. They can add a line enabling Databricks Runtime ML in their init script when creating their clusters.

B. They can check the Databricks Runtime ML box when creating their clusters.

C. They can select a Databricks Runtime ML version from the Databricks Runtime Version dropdown when creating their clusters.

D. They can set the runtime-version variable in their Spark session to "ml".

-

Question 36:

A health organization is developing a classification model to determine whether or not a patient currently has a specific type of infection. The organization's leaders want to maximize the number of positive cases identified by the model.

Which of the following classification metrics should be used to evaluate the model?

A. RMSE

B. Precision

C. Area under the residual operating curve

D. Accuracy

E. Recall
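As a reminder of how the classification metrics named in the options are defined, the following sketch computes precision, recall, and accuracy from confusion-matrix counts. The labels and predictions are invented for illustration, and `classification_metrics` is a hypothetical helper, not a library function.

```python
def classification_metrics(y_true, y_pred):
    """Compute precision, recall, and accuracy for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # infected, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # infected, missed
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # healthy, flagged
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # healthy, not flagged
    return {
        # Of the cases flagged positive, how many really are positive?
        "precision": tp / (tp + fp),
        # Of the cases that really are positive, how many were flagged?
        "recall": tp / (tp + fn),
        # Of all cases, how many were classified correctly?
        "accuracy": (tp + tn) / len(pairs),
    }


# Made-up example: 4 infected patients, 6 healthy patients.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
```

In this toy data the model misses two of the four infected patients, which shows up directly in the recall value but is less visible in accuracy.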

-

Question 37:

Which of the following approaches can be used to view the notebook that was run to create an MLflow run?

A. Open the MLmodel artifact in the MLflow run page

B. Click the "Models" link in the row corresponding to the run in the MLflow experiment page

C. Click the "Source" link in the row corresponding to the run in the MLflow experiment page

D. Click the "Start Time" link in the row corresponding to the run in the MLflow experiment page

-

Question 38:

A data scientist wants to efficiently tune the hyperparameters of a scikit-learn model in parallel. They elect to use the Hyperopt library to facilitate this process.

Which of the following Hyperopt tools provides the ability to optimize hyperparameters in parallel?

A. fmin

B. SparkTrials

C. quniform

D. search_space

E. objective_function

-

Question 39:

A data scientist has a Spark DataFrame spark_df. They want to create a new Spark DataFrame that contains only the rows from spark_df where the value in column price is greater than 0.

Which of the following code blocks will accomplish this task?

A. spark_df[spark_df["price"] > 0]

B. spark_df.filter(col("price") > 0)

C. SELECT * FROM spark_df WHERE price > 0

D. spark_df.loc[spark_df["price"] > 0,:]

E. spark_df.loc[:,spark_df["price"] > 0]

-

Question 40:

A data scientist has produced two models for a single machine learning problem. One of the models performs well when one of the features has a value of less than 5, and the other model performs well when the value of that feature is greater than or equal to 5. The data scientist decides to combine the two models into a single machine learning solution.

Which of the following terms is used to describe this combination of models?

A. Bootstrap aggregation

B. Support vector machines

C. Bucketing

D. Ensemble learning

E. Stacking
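The setup described in the question, two models each trusted on a different range of one feature, can be sketched as a single prediction function that routes each input to the appropriate model. Everything here is hypothetical: `model_low` and `model_high` are placeholder functions standing in for trained models, and only the threshold of 5 comes from the question text.

```python
def model_low(feature):
    """Placeholder for the model that performs well when feature < 5."""
    return 2.0 * feature


def model_high(feature):
    """Placeholder for the model that performs well when feature >= 5."""
    return 10.0 + 0.5 * feature


def combined_predict(feature, threshold=5):
    """Route the input to whichever model is trusted for this feature value."""
    if feature < threshold:
        return model_low(feature)
    return model_high(feature)


low_prediction = combined_predict(3)   # handled by model_low
high_prediction = combined_predict(5)  # handled by model_high
```

The caller sees one solution with one prediction function, even though two separately trained models sit behind it.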

