DATABRICKS-MACHINE-LEARNING-ASSOCIATE Exam Details

- Exam Code: DATABRICKS-MACHINE-LEARNING-ASSOCIATE
- Exam Name: Databricks Certified Machine Learning Associate
- Certification: Databricks Certifications
- Vendor: Databricks
- Total Questions: 74 Q&As
- Last Updated: Jan 10, 2026

Databricks DATABRICKS-MACHINE-LEARNING-ASSOCIATE Online Questions & Answers

Question 1:

A machine learning engineer has been notified that a new Staging version of a model registered to the MLflow Model Registry has passed all tests. As a result, the machine learning engineer wants to put this model into production by transitioning it to the Production stage in the Model Registry.

From which of the following pages in Databricks Machine Learning can the machine learning engineer accomplish this task?

A. The home page of the MLflow Model Registry

B. The experiment page in the Experiments observatory

C. The model version page in the MLflow Model Registry

D. The model page in the MLflow Model Registry

Question 2:

A data scientist is developing a machine learning pipeline using AutoML on Databricks Machine Learning.

Which of the following steps will the data scientist need to perform outside of their AutoML experiment?

A. Model tuning

B. Model evaluation

C. Model deployment

D. Exploratory data analysis

Question 3:

Which of the following tools can be used to distribute large-scale feature engineering without the use of a UDF or pandas Function API for machine learning pipelines?

A. Keras

B. pandas

C. PyTorch

D. Spark ML

E. Scikit-learn

Question 4:

What is the name of the method that transforms categorical features into a series of binary indicator feature variables?

A. Leave-one-out encoding

B. Target encoding

C. One-hot encoding

D. Categorical

E. String indexing
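For reference, turning one categorical column into a series of binary indicator columns can be sketched in plain Python. This is only an illustration of the idea, not a library implementation; the function name `one_hot_encode` and the sample colors are hypothetical.

```python
def one_hot_encode(values):
    """Return the sorted category list and one 0/1 indicator row per input value."""
    categories = sorted(set(values))  # fixed, reproducible column order
    rows = [[1 if value == category else 0 for category in categories]
            for value in values]
    return categories, rows


# Hypothetical sample column with three distinct categories.
categories, encoded = one_hot_encode(["red", "green", "red", "blue"])

for value, row in zip(["red", "green", "red", "blue"], encoded):
    print(value, row)
```

Each row contains exactly one 1, in the column that matches the original category.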

Question 5:

A new data scientist has started working on an existing machine learning project. The project is a scheduled Job that retrains every day. The project currently exists in a Repo in Databricks. The data scientist has been tasked with improving the feature engineering of the pipeline's preprocessing stage. The data scientist wants to make necessary updates to the code that can be easily adopted into the project without changing what is being run each day.

Which approach should the data scientist take to complete this task?

A. They can create a new branch in Databricks, commit their changes, and push those changes to the Git provider.

B. They can clone the notebooks in the repository into a Databricks Workspace folder and make the necessary changes.

C. They can create a new Git repository, import it into Databricks, and copy and paste the existing code from the original repository before making changes.

D. They can clone the notebooks in the repository into a new Databricks Repo and make the necessary changes.

Question 6:

Which of the following tools can be used to distribute large-scale feature engineering without the use of a UDF or pandas Function API for machine learning pipelines?

A. Keras

B. Scikit-learn

C. PyTorch

D. Spark ML

Question 7:

A machine learning engineer is trying to scale a machine learning pipeline by distributing its feature engineering process.

Which of the following feature engineering tasks will be the least efficient to distribute?

A. One-hot encoding categorical features

B. Target encoding categorical features

C. Imputing missing feature values with the mean

D. Imputing missing feature values with the true median

E. Creating binary indicator features for missing values
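To see why some of these statistics distribute more easily than others, the sketch below simulates data split across three partitions using plain Python lists. It assumes nothing about Spark itself; the partition contents are made up for illustration. A mean can be combined exactly from per-partition sums and counts, while an exact median needs the values ordered together.

```python
from statistics import median

# Hypothetical data spread across three partitions (workers).
partitions = [[1, 2, 3], [4, 100], [5, 6, 7, 8]]

# Mean: each partition only reports (sum, count); the results combine exactly.
partials = [(sum(p), len(p)) for p in partitions]
total, count = map(sum, zip(*partials))
distributed_mean = total / count

# Exact median: all values must be brought together and ordered.
all_values = sorted(v for p in partitions for v in p)
true_median = median(all_values)

# Combining per-partition medians is not exact in general.
median_of_medians = median(median(p) for p in partitions)

print(distributed_mean, true_median, median_of_medians)
```

In this sample the median of the per-partition medians differs from the true median, which is why an exact median typically requires a global sort or an approximation.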

Question 8:

In which of the following situations is it preferable to impute missing feature values with their median value over the mean value?

A. When the features are of the categorical type

B. When the features are of the boolean type

C. When the features contain a lot of extreme outliers

D. When the features contain no outliers

E. When the features contain no missing values
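The effect of outliers on the two fill values can be checked with a small sketch using Python's `statistics` module. The column below is hypothetical, with `None` standing in for missing values and 5000 as a deliberately extreme entry.

```python
from statistics import mean, median

# Hypothetical feature column; None marks a missing value.
column = [48, None, 51, 5000, None, 50, 52, 49]
observed = [v for v in column if v is not None]

mean_fill = mean(observed)      # pulled far upward by the single extreme value
median_fill = median(observed)  # stays near the bulk of the data


def impute(values, fill):
    """Replace missing entries with the given fill value."""
    return [fill if v is None else v for v in values]


print("mean fill:  ", impute(column, mean_fill))
print("median fill:", impute(column, median_fill))
```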

Question 9:

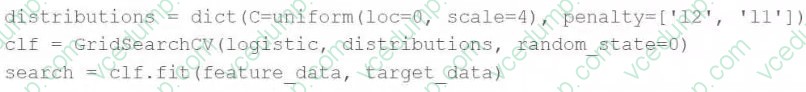

A data scientist is attempting to tune a logistic regression model logistic using scikit-learn. They want to specify a search space for two hyperparameters and let the tuning process randomly select values for each evaluation.

They attempt to run the following code block, but it does not accomplish the desired task:

Which of the following changes can the data scientist make to accomplish the task?

A. Replace the GridSearchCV operation with RandomizedSearchCV

B. Replace the GridSearchCV operation with cross_validate

C. Replace the GridSearchCV operation with ParameterGrid

D. Replace the random_state=0 argument with random_state=1

E. Replace the penalty=['l2', 'l1'] argument with penalty=uniform('l2', 'l1')
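The code block in the image is not reproduced here. As a rough conceptual illustration only, the standard-library sketch below contrasts exhaustively enumerating a search space with randomly sampling from it. It does not use scikit-learn; the `C` values, the helper names, and the number of iterations are assumptions chosen for the example.

```python
import itertools
import random

# Hypothetical search space for two hyperparameters.
search_space = {"C": [0.01, 0.1, 1.0, 10.0], "penalty": ["l2", "l1"]}


def grid_candidates(space):
    """Enumerate every combination in the search space (grid search)."""
    keys = list(space)
    combos = itertools.product(*(space[k] for k in keys))
    return [dict(zip(keys, combo)) for combo in combos]


def random_candidates(space, n_iter, seed=0):
    """Draw n_iter combinations at random from the search space (random search)."""
    rng = random.Random(seed)
    return [{k: rng.choice(v) for k, v in space.items()} for _ in range(n_iter)]


grid = grid_candidates(search_space)                  # all 4 * 2 combinations
sampled = random_candidates(search_space, n_iter=3)   # only 3 random draws

print(len(grid), "grid candidates;", len(sampled), "random candidates")
```

A grid evaluates every combination, whereas a random search evaluates only as many sampled combinations as requested.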

Question 10:

A team is developing guidelines on when to use various evaluation metrics for classification problems. The team needs to provide input on when to use the F1 score over accuracy.

Which of the following suggestions should the team include in their guidelines?

A. The F1 score should be utilized over accuracy when the number of actual positive cases is identical to the number of actual negative cases.

B. The F1 score should be utilized over accuracy when there are greater than two classes in the target variable.

C. The F1 score should be utilized over accuracy when there is significant imbalance between positive and negative classes and avoiding false negatives is a priority.

D. The F1 score should be utilized over accuracy when identifying true positives and true negatives are equally important to the business problem.
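How the two metrics can diverge on imbalanced data is easy to check with a small pure-Python sketch. The labels below are hypothetical: 5 positives among 100 cases, scored against a model that always predicts the negative class. The helper names `accuracy` and `f1` are illustrative, not a library API.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1(y_true, y_pred):
    """Harmonic mean of precision and recall for the positive class (label 1)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0  # no true positives: F1 is defined as 0 here
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical imbalanced labels and a model that never predicts positive.
y_true = [1] * 5 + [0] * 95
y_pred = [0] * 100

print("accuracy:", accuracy(y_true, y_pred))
print("F1:", f1(y_true, y_pred))
```

Accuracy looks strong because the negative class dominates, while the F1 score reflects that every positive case was missed.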

Related Exams:

- DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK: Databricks Certified Associate Developer for Apache Spark 3.0
- DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK-35: Databricks Certified Associate Developer for Apache Spark 3.5-Python
- DATABRICKS-CERTIFIED-DATA-ANALYST-ASSOCIATE: Databricks Certified Data Analyst Associate
- DATABRICKS-CERTIFIED-DATA-ENGINEER-ASSOCIATE: Databricks Certified Data Engineer Associate
- DATABRICKS-CERTIFIED-GENERATIVE-AI-ENGINEER-ASSOCIATE: Databricks Certified Generative AI Engineer Associate
- DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER: Databricks Certified Data Engineer Professional
- DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-SCIENTIST: Databricks Certified Professional Data Scientist
- DATABRICKS-MACHINE-LEARNING-ASSOCIATE: Databricks Certified Machine Learning Associate
- DATABRICKS-MACHINE-LEARNING-PROFESSIONAL: Databricks Certified Machine Learning Professional

Tips on How to Prepare for the Exams

Certification exams are increasingly important, and more and more employers require them of job applicants. But how can you prepare for an exam effectively, in a short time and with less effort? How can you get an ideal result, and where can you find the most reliable resources? On Vcedump.com, you will find the answers. Vcedump.com provides not only Databricks exam questions, answers, and explanations but also complete assistance with your exam preparation and certification application. If you are unsure about your DATABRICKS-MACHINE-LEARNING-ASSOCIATE exam preparation or your Databricks certification application, visit Vcedump.com to find solutions.