CKS Exam Details

- Exam Code: CKS
- Exam Name: Linux Foundation Certified Kubernetes Security Specialist (CKS)
- Certification: Linux Foundation Certifications
- Vendor: Linux Foundation
- Total Questions: 46 Q&As
- Last Updated: Jan 14, 2026

Linux Foundation CKS Online Questions & Answers

-

Question 1:

A container image scanner is set up on the cluster.

Given an incomplete configuration in the directory /etc/kubernetes/confcontrol and a functional container image scanner with the HTTPS endpoint https://test-server.local.8081/image_policy:

1. Enable the admission plugin.

2. Validate the control configuration and change it to implicit deny.

Finally, test the configuration by deploying a Pod whose image uses the tag latest.
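
As a rough sketch of the two steps above, the admission control configuration for the ImagePolicyWebhook plugin could look like the following. The kubeconfig file name is an assumption (use whichever file already exists in /etc/kubernetes/confcontrol), and the TTL and backoff values are illustrative only.

```yaml
# Sketch of an admission configuration file; file names are assumptions.
apiVersion: apiserver.config.k8s.io/v1
kind: AdmissionConfiguration
plugins:
  - name: ImagePolicyWebhook
    configuration:
      imagePolicy:
        # Hypothetical path: kubeconfig that points at the scanner endpoint.
        kubeConfigFile: /etc/kubernetes/confcontrol/kubeconfig.yaml
        allowTTL: 50
        denyTTL: 50
        retryBackoff: 500
        # Implicit deny: reject images when the webhook cannot be reached.
        defaultAllow: false
```

The plugin itself is enabled on the API server by adding ImagePolicyWebhook to --enable-admission-plugins and pointing --admission-control-config-file at this file.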

-

Question 2:

Enable audit logs in the cluster. To do so, enable the log backend and ensure that:

1. Logs are stored at /var/log/kubernetes-logs.txt.

2. Log files are retained for 12 days.

3. At most 8 old audit log files are retained.

4. The maximum size of a log file before it is rotated is 200 MB.
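
The four requirements above map onto kube-apiserver flags. A minimal fragment of the API server static Pod manifest might look like this, assuming a kubeadm-style layout; the policy file path is a hypothetical name, and the matching volumes and volumeMounts for the policy file and log path are omitted here.

```yaml
# Fragment only: the audit-related flags of the kube-apiserver container.
spec:
  containers:
    - name: kube-apiserver
      command:
        - kube-apiserver
        # Hypothetical location of the audit policy file.
        - --audit-policy-file=/etc/kubernetes/audit-policy.yaml
        - --audit-log-path=/var/log/kubernetes-logs.txt
        - --audit-log-maxage=12      # days to retain old log files
        - --audit-log-maxbackup=8    # number of old log files to keep
        - --audit-log-maxsize=200    # megabytes before rotation
```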

Edit and extend the basic policy to log:

1. Namespace changes at the RequestResponse level.

2. The request body of Secret changes in the namespace kube-system.

3. All other resources in core and extensions at the Request level.

4. "pods/portforward" and "services/proxy" at the Metadata level.

5. Omit the stage RequestReceived.

Log all other requests at the Metadata level.
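
One possible audit policy for the rules above is sketched below. Rule order matters because the first matching rule wins, so the subresource rule is placed before the catch-all rule for the core group. It does not restrict verbs, which is a simplifying assumption about what "changes" means in the task.

```yaml
apiVersion: audit.k8s.io/v1
kind: Policy
# Skip the RequestReceived stage for every rule.
omitStages:
  - "RequestReceived"
rules:
  # Namespaces at RequestResponse level.
  - level: RequestResponse
    resources:
      - group: ""
        resources: ["namespaces"]
  # Request body of Secrets in kube-system.
  - level: Request
    resources:
      - group: ""
        resources: ["secrets"]
    namespaces: ["kube-system"]
  # Subresources at Metadata level; must precede the core catch-all.
  - level: Metadata
    resources:
      - group: ""
        resources: ["pods/portforward", "services/proxy"]
  # Everything else in core and extensions at Request level.
  - level: Request
    resources:
      - group: ""
      - group: "extensions"
  # All remaining requests at Metadata level.
  - level: Metadata
```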

-

Question 3:

Task

Create a NetworkPolicy named pod-access to restrict access to Pod users-service running in namespace dev-team. Only allow the following Pods to connect to Pod users-service:

1. Pods in the namespace qa

2. Pods with label environment: testing, in any namespace
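
A sketch of a NetworkPolicy matching the two conditions above follows. The Pod label app: users-service is an assumption (check the real labels with kubectl get pod --show-labels), and the namespace selector relies on the kubernetes.io/metadata.name label that recent Kubernetes versions set automatically on every namespace.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: pod-access
  namespace: dev-team
spec:
  podSelector:
    matchLabels:
      app: users-service   # assumed label of Pod users-service
  policyTypes:
    - Ingress
  ingress:
    - from:
        # 1. Any Pod in the namespace qa.
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: qa
        # 2. Pods labelled environment: testing in any namespace.
        - namespaceSelector: {}
          podSelector:
            matchLabels:
              environment: testing
```

The two entries under from are separate list items, so they are combined with OR; putting namespaceSelector and podSelector in the same item combines them with AND.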

-

Question 4:

Create a RuntimeClass named gvisor-rc using the prepared runtime handler named runsc.

Create a Pod using the image nginx in the namespace server that runs on the gVisor RuntimeClass.
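
A minimal sketch of both objects is shown below. The Pod and container names are hypothetical; only the RuntimeClass name, handler, image, and namespace come from the task.

```yaml
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: gvisor-rc
handler: runsc              # the prepared runtime handler
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-gvisor        # hypothetical Pod name
  namespace: server
spec:
  runtimeClassName: gvisor-rc
  containers:
    - name: nginx
      image: nginx
```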

-

Question 5:

Context

A default-deny NetworkPolicy prevents accidentally exposing a Pod in a namespace that has no other NetworkPolicy defined.

Task

Create a new default-deny NetworkPolicy named defaultdeny in the namespace testing for all traffic of type Egress.

The new NetworkPolicy must deny all Egress traffic in the namespace testing.

Apply the newly created default-deny NetworkPolicy to all Pods running in namespace testing.
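
One way to express this task is the policy below: an empty podSelector selects every Pod in the namespace, and listing Egress under policyTypes without any egress rules denies all outbound traffic.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: defaultdeny
  namespace: testing
spec:
  podSelector: {}     # applies to all Pods in namespace testing
  policyTypes:
    - Egress          # no egress rules listed, so all egress is denied
```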

-

Question 6:

Context

This cluster uses containerd as CRI runtime.

Containerd's default runtime handler is runc. Containerd has been prepared to support an additional runtime handler, runsc (gVisor).

Task

Create a RuntimeClass named sandboxed using the prepared runtime handler named runsc.

Update all Pods in the namespace server to run on gVisor.

-

Question 7:

Create a RuntimeClass named untrusted using the prepared runtime handler named runsc.

Create a Pod using the image alpine:3.13.2 in the namespace default that runs on the gVisor RuntimeClass.

-

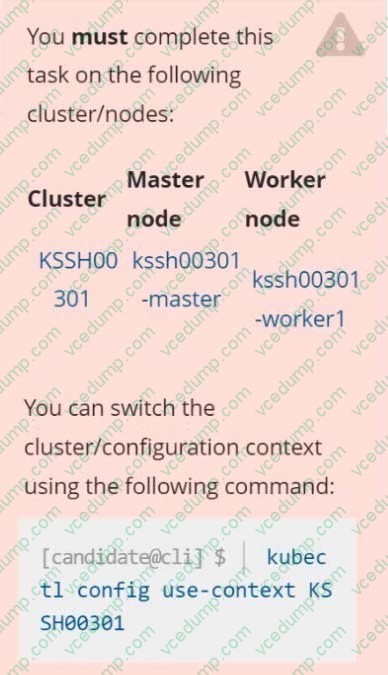

Question 8:

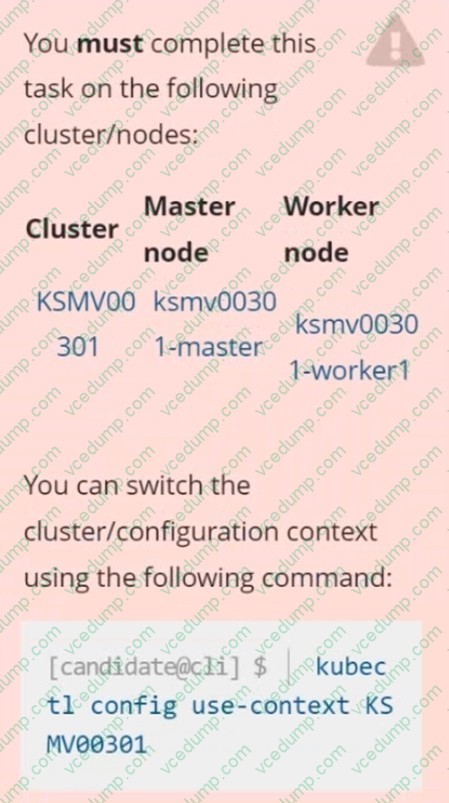

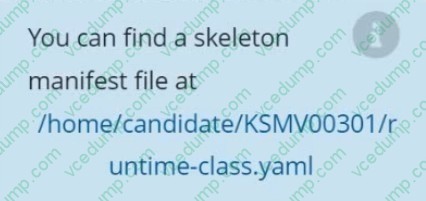

You must complete this task on the following cluster/nodes:

Cluster: trace

Master node: master

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context trace

Given: You may use Sysdig or Falco documentation.

Task:

Use detection tools to detect anomalies, such as processes that frequently spawn and execute something unusual, in the single container belonging to Pod tomcat.

Two tools are available to use:

1. falco

2. sysdig

Tools are pre-installed on the worker1 node only.

Analyse the container's behaviour for at least 40 seconds, using filters that detect newly spawning and executing processes.

Store an incident file at /home/cert_masters/report, in the following format:

[timestamp],[uid],[processName]

Note: Store the incident file on the cluster's worker node; do not move it to the master node.
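
If Falco is the chosen tool, a custom rule along the following lines could produce the requested fields. This is a sketch under assumptions: the rule name and the container name tomcat are hypothetical, and the spawned_process macro is expected to come from the default Falco ruleset loaded alongside this file.

```yaml
# Hypothetical custom rules file, kept on worker1.
- rule: Spawned process in tomcat container
  desc: Report every newly spawned process in the tomcat container
  # spawned_process is a macro from the default Falco rules;
  # the container name is an assumption and may differ on the cluster.
  condition: spawned_process and container.name = "tomcat"
  # Matches the requested format [timestamp],[uid],[processName].
  output: "%evt.time,%user.uid,%proc.name"
  priority: WARNING
```

Falco prefixes each alert with its own timestamp and priority, so the captured lines may need trimming before they are saved to /home/cert_masters/report.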

-

Question 9:

Create a user named john: create a CertificateSigningRequest (CSR) for the user, approve it, and fetch the issued certificate.

Create a Role named john-role that allows listing Secrets and Pods in the namespace john.

Finally, create a RoleBinding named john-role-binding to attach the newly created Role john-role to the user john in the namespace john.

To verify: use the kubectl auth CLI command to check the permissions.
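
The objects involved can be sketched as follows. The CSR object name and the request placeholder are assumptions; the request field must hold the base64-encoded PEM certificate request generated for john.

```yaml
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: john                      # hypothetical CSR object name
spec:
  request: "<base64-encoded CSR>" # placeholder, not a real value
  signerName: kubernetes.io/kube-apiserver-client
  usages:
    - client auth
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: john-role
  namespace: john
rules:
  - apiGroups: [""]
    resources: ["secrets", "pods"]
    verbs: ["list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: john-role-binding
  namespace: john
subjects:
  - kind: User
    name: john
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: john-role
  apiGroup: rbac.authorization.k8s.io
```

After running kubectl certificate approve on the CSR, the permissions can be checked with, for example, kubectl auth can-i list secrets --as john -n john.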

-

Question 10:

Use the kubesec Docker image to scan the given YAML manifest, edit it to apply the advised changes, and make it pass with a score of 4 points.

kubesec-test.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kubesec-demo
spec:
  containers:
    - name: kubesec-demo
      image: gcr.io/google-samples/node-hello:1.0
      securityContext:
        readOnlyRootFilesystem: true
```

Hint: docker run -i kubesec/kubesec:512c5e0 scan /dev/stdin < kubesec-test.yaml
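
As an illustration only, a hardened version of the manifest might add the securityContext fields that kubesec commonly advises. The specific additions and the UID are assumptions, and the resulting score has not been verified here; re-run the scan from the hint to confirm it.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kubesec-demo
spec:
  containers:
    - name: kubesec-demo
      image: gcr.io/google-samples/node-hello:1.0
      securityContext:
        readOnlyRootFilesystem: true
        # Assumed additions; adjust to what the scan output actually advises.
        runAsNonRoot: true
        runAsUser: 10001
        capabilities:
          drop: ["ALL"]
```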
