Exam Details

Exam Code: DATABRICKS-CERTIFIED-DATA-ENGINEER-ASSOCIATE

Exam Name: Databricks Certified Data Engineer Associate

Certification: Databricks Certifications

Vendor: Databricks

Total Questions: 132 Q&As

Last Updated: Jul 02, 2025

DATABRICKS-CERTIFIED-DATA-ENGINEER-ASSOCIATE Questions & Answers

-

Question 61:

A single Job runs two notebooks as two separate tasks. A data engineer has noticed that one of the notebooks is running slowly in the Job's current run. The data engineer asks a tech lead for help in identifying why this might be the case. Which of the following approaches can the tech lead use to identify why the notebook is running slowly as part of the Job?

A. They can navigate to the Runs tab in the Jobs UI to immediately review the processing notebook.

B. They can navigate to the Tasks tab in the Jobs UI and click on the active run to review the processing notebook.

C. They can navigate to the Runs tab in the Jobs UI and click on the active run to review the processing notebook.

D. There is no way to determine why a Job task is running slowly.

E. They can navigate to the Tasks tab in the Jobs UI to immediately review the processing notebook.

-

Question 62:

A data engineer has been given a new record of data:

id STRING = 'a1'

rank INTEGER = 6

rating FLOAT = 9.4

Which of the following SQL commands can be used to append the new record to an existing Delta table my_table?

A. INSERT INTO my_table VALUES ('a1', 6, 9.4)

B. my_table UNION VALUES ('a1', 6, 9.4)

C. INSERT VALUES ( 'a1' , 6, 9.4) INTO my_table

D. UPDATE my_table VALUES ('a1', 6, 9.4)

E. UPDATE VALUES ('a1', 6, 9.4) my_table
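
For reference, the standard SQL form for appending a single row is `INSERT INTO <table> VALUES (...)`. The sketch below runs that statement against an in-memory SQLite table as a stand-in for a Delta table; the column types, the pre-existing row, and the use of SQLite are assumptions made only so the example runs anywhere. On Databricks the same statement would typically run in a SQL cell or through `spark.sql(...)`.

```python
import sqlite3

# In-memory SQLite database used purely as a stand-in for a Delta table.
conn = sqlite3.connect(":memory:")

# Assumed schema, inferred from the record in the question.
conn.execute('CREATE TABLE my_table (id TEXT, "rank" INTEGER, rating REAL)')

# A hypothetical pre-existing row, so the append is visible.
conn.execute("INSERT INTO my_table VALUES ('z9', 1, 5.0)")

# Append the new record; existing rows are left untouched.
conn.execute("INSERT INTO my_table VALUES ('a1', 6, 9.4)")

rows = conn.execute("SELECT * FROM my_table ORDER BY rowid").fetchall()
print(rows)
conn.close()
```

The existing row stays in place and the new record is added after it, which is what "append" means here.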

-

Question 63:

A data engineer has left the organization. The data team needs to transfer ownership of the data engineer's Delta tables to a new data engineer. The new data engineer is the lead engineer on the data team. Assuming the original data engineer no longer has access, which of the following individuals must be the one to transfer ownership of the Delta tables in Data Explorer?

A. Databricks account representative

B. This transfer is not possible

C. Workspace administrator

D. New lead data engineer

E. Original data engineer

-

Question 64:

A data engineer and data analyst are working together on a data pipeline. The data engineer is working on the raw, bronze, and silver layers of the pipeline using Python, and the data analyst is working on the gold layer of the pipeline using SQL. The raw source of the pipeline is a streaming input. They now want to migrate their pipeline to use Delta Live Tables.

Which of the following changes will need to be made to the pipeline when migrating to Delta Live Tables?

A. None of these changes will need to be made

B. The pipeline will need to stop using the medallion-based multi-hop architecture

C. The pipeline will need to be written entirely in SQL

D. The pipeline will need to use a batch source in place of a streaming source

E. The pipeline will need to be written entirely in Python

-

Question 65:

Which of the following data workloads will utilize a Gold table as its source?

A. A job that enriches data by parsing its timestamps into a human-readable format

B. A job that aggregates uncleaned data to create standard summary statistics

C. A job that cleans data by removing malformatted records

D. A job that queries aggregated data designed to feed into a dashboard

E. A job that ingests raw data from a streaming source into the Lakehouse
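
As background for the medallion layers the options refer to, the toy sketch below models bronze (raw), silver (cleaned), and gold (aggregated) data with plain Python lists and dicts. The field names, sample values, and helper functions are hypothetical; a real pipeline would use Delta tables rather than in-memory structures.

```python
from statistics import mean

# Bronze: raw records exactly as ingested, including a malformed value.
bronze = [
    {"source": "api", "latency_ms": "120"},
    {"source": "api", "latency_ms": "bad"},
    {"source": "files", "latency_ms": "300"},
    {"source": "api", "latency_ms": "80"},
]

def to_silver(rows):
    """Clean bronze rows: parse latency as an int and skip malformed records."""
    cleaned = []
    for row in rows:
        try:
            latency = int(row["latency_ms"])
        except ValueError:
            continue  # malformed record is removed at the silver layer
        cleaned.append({"source": row["source"], "latency_ms": latency})
    return cleaned

def to_gold(rows):
    """Aggregate silver rows into one average latency per source."""
    by_source = {}
    for row in rows:
        by_source.setdefault(row["source"], []).append(row["latency_ms"])
    return {source: mean(values) for source, values in by_source.items()}

silver = to_silver(bronze)
gold = to_gold(silver)

# A dashboard-style consumer reads the aggregated gold data directly.
print(gold)
```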

-

Question 66:

A Delta Live Tables pipeline includes two datasets defined using STREAMING LIVE TABLE. Three datasets are defined against Delta Lake table sources using LIVE TABLE. The pipeline is configured to run in Development mode using the Continuous Pipeline Mode.

Assuming previously unprocessed data exists and all definitions are valid, what is the expected outcome after clicking Start to update the pipeline?

A. All datasets will be updated once and the pipeline will shut down. The compute resources will be terminated.

B. All datasets will be updated at set intervals until the pipeline is shut down. The compute resources will persist until the pipeline is shut down.

C. All datasets will be updated once and the pipeline will persist without any processing. The compute resources will persist but go unused.

D. All datasets will be updated once and the pipeline will shut down. The compute resources will persist to allow for additional testing.

E. All datasets will be updated at set intervals until the pipeline is shut down. The compute resources will persist to allow for additional testing.

-

Question 67:

A dataset has been defined using Delta Live Tables and includes an expectations clause:

CONSTRAINT valid_timestamp EXPECT (timestamp > '2020-01-01') ON VIOLATION FAIL UPDATE

What is the expected behavior when a batch containing data that violates this constraint is processed?

A. Records that violate the expectation are dropped from the target dataset and recorded as invalid in the event log.

B. Records that violate the expectation cause the job to fail.

C. Records that violate the expectation are dropped from the target dataset and loaded into a quarantine table.

D. Records that violate the expectation are added to the target dataset and recorded as invalid in the event log.

E. Records that violate the expectation are added to the target dataset and flagged as invalid in a field added to the target dataset.
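
Delta Live Tables expectations support three violation policies: the default (keep the record and report the violation), `ON VIOLATION DROP ROW`, and `ON VIOLATION FAIL UPDATE`. The sketch below is a plain-Python model of those policies, not the DLT API; `apply_expectation`, `ExpectationFailed`, and the sample batch are hypothetical names chosen for illustration.

```python
from datetime import date

class ExpectationFailed(Exception):
    """Raised when a 'fail' policy sees at least one violating record."""

def apply_expectation(rows, predicate, on_violation="warn"):
    """Model the three policies: 'warn' (default), 'drop', and 'fail'."""
    violations = [row for row in rows if not predicate(row)]
    if on_violation == "fail" and violations:
        # Models FAIL UPDATE: the whole update stops, nothing is written.
        raise ExpectationFailed(f"{len(violations)} record(s) violated the expectation")
    if on_violation == "drop":
        kept = [row for row in rows if predicate(row)]  # models DROP ROW
    else:
        kept = list(rows)  # default: violating records are retained
    return kept, len(violations)

def valid_timestamp(row):
    """Mirror of the constraint: timestamp > '2020-01-01'."""
    return row["timestamp"] > date(2020, 1, 1)

batch = [
    {"id": 1, "timestamp": date(2021, 5, 1)},
    {"id": 2, "timestamp": date(2019, 12, 31)},  # violates the constraint
]

kept_warn, bad_warn = apply_expectation(batch, valid_timestamp, "warn")
kept_drop, bad_drop = apply_expectation(batch, valid_timestamp, "drop")

try:
    apply_expectation(batch, valid_timestamp, "fail")
    update_failed = False
except ExpectationFailed:
    update_failed = True

print(len(kept_warn), len(kept_drop), update_failed)
```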

-

Question 68:

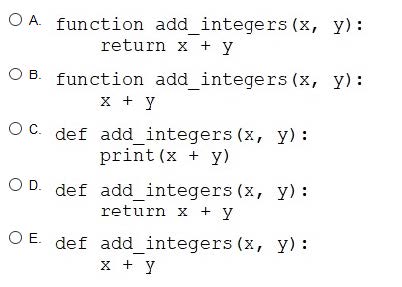

A data engineer who is new to Python needs to create a Python function that adds two integers together and returns the sum. Which of the following code blocks can the data engineer use to complete this task?

A. Option A

B. Option B

C. Option C

D. Option D

E. Option E
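
The option code blocks appear only in the image and cannot be reproduced here. For reference, a minimal Python function that adds two integers and returns the sum could look like the sketch below; the function and parameter names are illustrative, not taken from the options.

```python
def add_integers(x: int, y: int) -> int:
    """Return the sum of two integers."""
    # `def` introduces the function; `return` hands the result back to the caller.
    return x + y

total = add_integers(2, 3)
print(total)
```

Note that the function must use `return` rather than `print` for the caller to receive the sum as a value.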

-

Question 69:

An engineering manager uses a Databricks SQL query to monitor ingestion latency for each data source. The manager checks the results of the query every day, but they are manually rerunning the query each day and waiting for the results.

Which of the following approaches can the manager use to ensure the results of the query are updated each day?

A. They can schedule the query to refresh every 1 day from the SQL endpoint's page in Databricks SQL.

B. They can schedule the query to refresh every 12 hours from the SQL endpoint's page in Databricks SQL.

C. They can schedule the query to refresh every 1 day from the query's page in Databricks SQL.

D. They can schedule the query to run every 1 day from the Jobs UI.

E. They can schedule the query to run every 12 hours from the Jobs UI.

-

Question 70:

In which of the following scenarios should a data engineer use the MERGE INTO command instead of the INSERT INTO command?

A. When the location of the data needs to be changed

B. When the target table is an external table

C. When the source table can be deleted

D. When the target table cannot contain duplicate records

E. When the source is not a Delta table
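
To illustrate the difference between the two commands, the sketch below models them on lists of dicts: `insert_into` appends every source row, while `merge_into` matches rows on a key, updating matches and inserting the rest. This models only the common upsert usage of `MERGE INTO` (`WHEN MATCHED THEN UPDATE` / `WHEN NOT MATCHED THEN INSERT`); the helper names and sample rows are hypothetical, and real tables would be modified with SQL rather than Python lists.

```python
def insert_into(target, source):
    """Model INSERT INTO: append all source rows, with no key check."""
    return target + source

def merge_into(target, source, key):
    """Model an upsert-style MERGE INTO keyed on `key`."""
    merged = {row[key]: row for row in target}
    for row in source:
        # Matched key: row is replaced (update). Unmatched key: row is added (insert).
        merged[row[key]] = row
    return list(merged.values())

target = [{"id": "a1", "rating": 9.4}]
source = [{"id": "a1", "rating": 9.9}, {"id": "b2", "rating": 7.0}]

appended = insert_into(target, source)
upserted = merge_into(target, source, key="id")

print(len(appended), len(upserted))
```

With the sample data, the append leaves two rows with id `a1`, while the merge keeps one row per id.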

Related Exams:

DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK: Databricks Certified Associate Developer for Apache Spark 3.0

DATABRICKS-CERTIFIED-DATA-ANALYST-ASSOCIATE: Databricks Certified Data Analyst Associate

DATABRICKS-CERTIFIED-DATA-ENGINEER-ASSOCIATE: Databricks Certified Data Engineer Associate

DATABRICKS-CERTIFIED-GENERATIVE-AI-ENGINEER-ASSOCIATE: Databricks Certified Generative AI Engineer Associate

DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER: Databricks Certified Data Engineer Professional

DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-SCIENTIST: Databricks Certified Professional Data Scientist

DATABRICKS-MACHINE-LEARNING-ASSOCIATE: Databricks Certified Machine Learning Associate

DATABRICKS-MACHINE-LEARNING-PROFESSIONAL: Databricks Certified Machine Learning Professional

Tips on How to Prepare for the Exams

Certification exams are becoming increasingly important, and more and more enterprises require them of job applicants. But how can you prepare for an exam effectively, in a short time and with less effort? How can you get an ideal result, and where can you find the most reliable resources? Vcedump.com has the answers. Vcedump.com provides not only Databricks exam questions, answers, and explanations but also complete assistance with exam preparation and certification application. If you are unsure about your DATABRICKS-CERTIFIED-DATA-ENGINEER-ASSOCIATE exam preparation or Databricks certification application, visit Vcedump.com to find your solutions.