Exam Details

Exam Code: DATABRICKS-CERTIFIED-DATA-ENGINEER-ASSOCIATE

Exam Name: Databricks Certified Data Engineer Associate

Certification: Databricks Certifications

Vendor: Databricks

Total Questions: 132 Q&As

Last Updated: Jul 02, 2025

Databricks Certified Data Engineer Associate (DATABRICKS-CERTIFIED-DATA-ENGINEER-ASSOCIATE) Questions & Answers

-

Question 11:

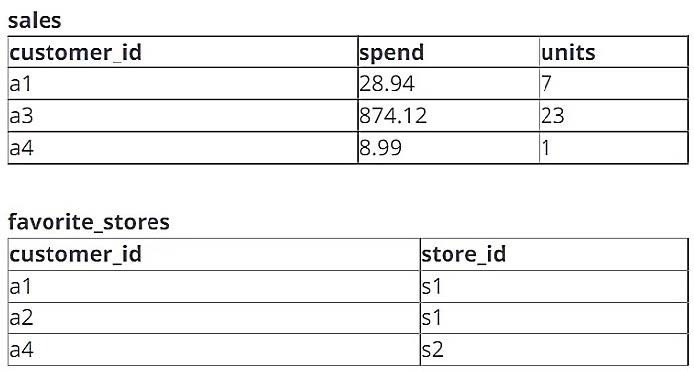

A data engineer is working with two tables. Each of these tables is displayed below in its entirety.

The data engineer runs the following query to join these tables together:

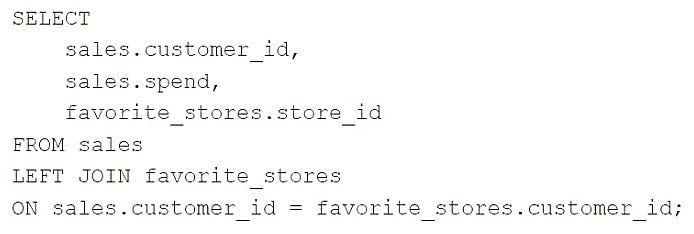

Which of the following will be returned by the above query?

A. Option A

B. Option B

C. Option C

D. Option D

E. Option E

-

Question 12:

Which of the following must be specified when creating a new Delta Live Tables pipeline?

A. A key-value pair configuration

B. The preferred DBU/hour cost

C. A path to cloud storage location for the written data

D. A location of a target database for the written data

E. At least one notebook library to be executed

-

Question 13:

A data engineer is attempting to drop a Spark SQL table my_table and runs the following command:

DROP TABLE IF EXISTS my_table;

After running this command, the engineer notices that the data files and metadata files have been deleted from the file system.

Which of the following describes why all of these files were deleted?

A. The table was managed

B. The table's data was smaller than 10 GB

C. The table's data was larger than 10 GB

D. The table was external

E. The table did not have a location

-

Question 14:

A data engineer is attempting to drop a Spark SQL table my_table. The data engineer wants to delete all table metadata and data.

They run the following command:

DROP TABLE IF EXISTS my_table

While the object no longer appears when they run SHOW TABLES, the data files still exist. Which of the following describes why the data files still exist and the metadata files were deleted?

A. The table's data was larger than 10 GB

B. The table's data was smaller than 10 GB

C. The table was external

D. The table did not have a location

E. The table was managed
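The behaviour described in Questions 13 and 14 depends on whether a table is managed or external. The following is a minimal sketch, not a definitive implementation, of how one might check this from PySpark before dropping a table. It assumes `spark` is an active SparkSession and that `DESCRIBE TABLE EXTENDED` reports a `Type` row with the value `MANAGED` or `EXTERNAL`. The helper names `table_type` and `drop_removes_data` are hypothetical.

```python
def table_type(spark, table_name):
    """Return the table type reported by DESCRIBE TABLE EXTENDED, or None."""
    # Each result row has the fields col_name, data_type, and comment.
    rows = spark.sql(f"DESCRIBE TABLE EXTENDED {table_name}").collect()
    for row in rows:
        if row.col_name == "Type":
            return row.data_type  # e.g. "MANAGED" or "EXTERNAL"
    return None


def drop_removes_data(spark, table_name):
    """True if DROP TABLE is expected to delete the data files as well.

    Dropping a managed table removes both metadata and data; dropping an
    external table removes only the metadata and leaves the files in place.
    """
    return table_type(spark, table_name) == "MANAGED"
```

On a cluster, calling `drop_removes_data(spark, "my_table")` before `DROP TABLE IF EXISTS my_table` would indicate whether the data files are expected to survive the drop.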

-

Question 15:

A data organization leader is upset about the data analysis team's reports being different from the data engineering team's reports. The leader believes the siloed nature of their organization's data engineering and data analysis architectures is to blame.

Which of the following describes how a data lakehouse could alleviate this issue?

A. Both teams would autoscale their work as data size evolves

B. Both teams would use the same source of truth for their work

C. Both teams would reorganize to report to the same department

D. Both teams would be able to collaborate on projects in real-time

E. Both teams would respond more quickly to ad-hoc requests

-

Question 16:

A data analysis team has noticed that their Databricks SQL queries are running too slowly when connected to their always-on SQL endpoint. They claim that this issue is present when many members of the team are running small queries simultaneously. They ask the data engineering team for help. The data engineering team notices that each of the team's queries uses the same SQL endpoint.

Which of the following approaches can the data engineering team use to improve the latency of the team's queries?

A. They can increase the cluster size of the SQL endpoint.

B. They can increase the maximum bound of the SQL endpoint's scaling range.

C. They can turn on the Auto Stop feature for the SQL endpoint.

D. They can turn on the Serverless feature for the SQL endpoint.

E. They can turn on the Serverless feature for the SQL endpoint and change the Spot Instance Policy to "Reliability Optimized."

-

Question 17:

A data analyst has developed a query that runs against a Delta table. They want help from the data engineering team to implement a series of tests to ensure the data returned by the query is clean. However, the data engineering team uses Python for its tests rather than SQL.

Which of the following operations could the data engineering team use to run the query and operate with the results in PySpark?

A. SELECT * FROM sales

B. spark.delta.table

C. spark.sql

D. There is no way to share data between PySpark and SQL.

E. spark.table
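As an illustration of the scenario in Question 17, here is a minimal sketch of running a SQL query from Python and testing the result. It relies on `spark.sql`, which takes a SQL string and returns a DataFrame. It assumes `spark` is an active SparkSession. The helper names, and the choice of a NULL check as the meaning of "clean", are assumptions made for this example only.

```python
def null_counts(spark, query, columns):
    """Run `query` once and count NULL values in each listed column."""
    df = spark.sql(query)  # the result of the SQL query as a DataFrame
    # DataFrame.filter accepts a SQL expression string.
    return {c: df.filter(f"{c} IS NULL").count() for c in columns}


def dirty_columns(spark, query, columns):
    """Return the columns that contain at least one NULL value."""
    counts = null_counts(spark, query, columns)
    return [c for c, n in counts.items() if n > 0]
```

A test could then assert that `dirty_columns(spark, "SELECT * FROM sales", ["id", "price"])` returns an empty list. The column names here are placeholders.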

-

Question 18:

Which of the following describes when to use the CREATE STREAMING LIVE TABLE (formerly CREATE INCREMENTAL LIVE TABLE) syntax over the CREATE LIVE TABLE syntax when creating Delta Live Tables (DLT) tables using SQL?

A. CREATE STREAMING LIVE TABLE should be used when the subsequent step in the DLT pipeline is static.

B. CREATE STREAMING LIVE TABLE should be used when data needs to be processed incrementally.

C. CREATE STREAMING LIVE TABLE is redundant for DLT and it does not need to be used.

D. CREATE STREAMING LIVE TABLE should be used when data needs to be processed through complicated aggregations.

E. CREATE STREAMING LIVE TABLE should be used when the previous step in the DLT pipeline is static.

-

Question 19:

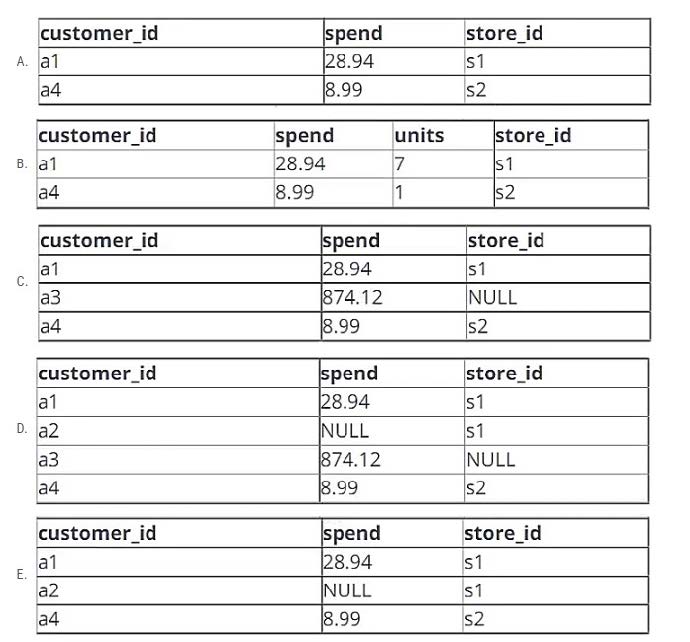

A data engineer needs to create a table in Databricks using data from their organization's existing SQLite database. They run the following command:

Which of the following lines of code fills in the above blank to successfully complete the task?

A. org.apache.spark.sql.jdbc

B. autoloader

C. DELTA

D. sqlite

E. org.apache.spark.sql.sqlite
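For context on Question 19, Spark reaches external relational databases such as SQLite through its JDBC data source. The sketch below shows the DataFrame-reader equivalent in PySpark rather than the SQL command from the question, which is not reproduced here. It assumes `spark` is an active SparkSession and that a SQLite JDBC driver is installed on the cluster. The function name and its arguments are hypothetical.

```python
def read_sqlite_table(spark, db_path, table_name):
    """Load one table from a SQLite file through Spark's JDBC data source."""
    # SQLite JDBC URLs have the form jdbc:sqlite:<path to database file>.
    url = f"jdbc:sqlite:{db_path}"
    return (
        spark.read.format("jdbc")      # built-in JDBC data source
        .option("url", url)            # connection string
        .option("dbtable", table_name) # table to read
        .load()
    )
```

The returned DataFrame could then be saved as a table, for example with `saveAsTable`, if a persistent copy in Databricks is needed.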

-

Question 20:

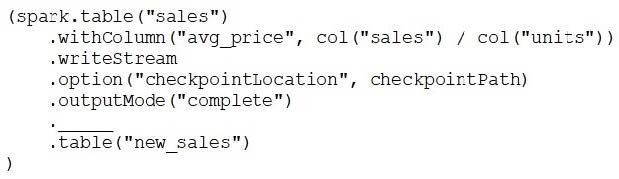

A data engineer has configured a Structured Streaming job to read from a table, manipulate the data, and then perform a streaming write into a new table.

The cade block used by the data engineer is below:

If the data engineer only wants the query to execute a micro-batch to process data every 5 seconds, which of the following lines of code should the data engineer use to fill in the blank?

A. trigger("5 seconds")

B. trigger()

C. trigger(once="5 seconds")

D. trigger(processingTime="5 seconds")

E. trigger(continuous="5 seconds")
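To illustrate the fixed-interval micro-batch behaviour that Question 20 asks about, here is a minimal sketch of a streaming write. It uses the `processingTime` argument of `DataStreamWriter.trigger`, which starts a micro-batch at the given interval. It is not the code block from the question. It assumes `stream_df` is a streaming DataFrame and that `toTable` is available (Spark 3.1 or later). The function name, the checkpoint path, and the table name are hypothetical.

```python
def start_five_second_stream(stream_df, checkpoint_path, target_table):
    """Start a streaming write that runs a micro-batch every 5 seconds."""
    return (
        stream_df.writeStream
        .outputMode("append")
        .option("checkpointLocation", checkpoint_path)
        # processingTime sets a fixed micro-batch interval.
        .trigger(processingTime="5 seconds")
        # toTable starts the query and returns a StreamingQuery handle.
        .toTable(target_table)
    )
```

By contrast, `trigger(once=True)` processes the available data in a single batch and then stops, and `trigger(continuous=...)` selects continuous processing rather than micro-batches.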

