DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK-35 Exam Details

Exam Code: DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK-35

Exam Name: Databricks Certified Associate Developer for Apache Spark 3.5-Python

Certification: Databricks Certifications

Vendor: Databricks

Total Questions: 85 Q&As

Last Updated: Jan 09, 2026

Databricks DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK-35 Online Questions & Answers

Question 1:

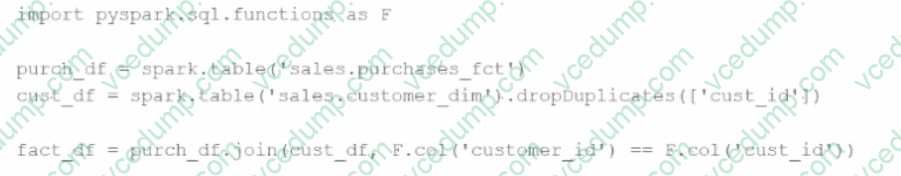

A developer is trying to join two tables, sales.purchases_fct and sales.customer_dim, using the following code:

fact_df = purch_df.join(cust_df, F.col('customer_id') == F.col('custid'))

The developer has discovered that customers in the purchases_fct table that do not exist in the customer_dim table are being dropped from the joined table.

Which change should be made to the code to stop these customer records from being dropped?

A. fact_df = purch_df.join(cust_df, F.col('customer_id') == F.col('custid'), 'left')

B. fact_df = cust_df.join(purch_df, F.col('customer_id') == F.col('custid'))

C. fact_df = purch_df.join(cust_df, F.col('cust_id') == F.col('customer_id'))

D. fact_df = purch_df.join(cust_df, F.col('customer_id') == F.col('custid'), 'right_outer')
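The behavior described in this question follows from join semantics: `DataFrame.join` defaults to an inner join, which keeps only rows with a match on both sides, while a left join keeps every row from the left-hand DataFrame. The sketch below is a plain-Python model of that difference, not PySpark code; the `join` helper and the sample rows are made up for illustration.

```python
# Plain-Python model of inner vs. left join semantics (not PySpark).
# The rows and the join() helper are hypothetical, for illustration only.
purchases = [
    {"customer_id": 1, "amount": 20},
    {"customer_id": 2, "amount": 35},
    {"customer_id": 9, "amount": 50},  # no matching customer row
]
customers = [
    {"custid": 1, "name": "Ann"},
    {"custid": 2, "name": "Bo"},
]


def join(left, right, left_key, right_key, how="inner"):
    """Join two lists of dicts; how='left' keeps unmatched left rows."""
    right_columns = sorted({key for row in right for key in row})
    result = []
    for l_row in left:
        matches = [r for r in right if r[right_key] == l_row[left_key]]
        if matches:
            result.extend({**l_row, **r} for r in matches)
        elif how == "left":
            # Unmatched left row survives; right-side columns become None.
            result.append({**l_row, **{c: None for c in right_columns}})
    return result


inner_rows = join(purchases, customers, "customer_id", "custid")
left_rows = join(purchases, customers, "customer_id", "custid", how="left")
print(len(inner_rows), len(left_rows))
```

In this model the purchase for customer 9 disappears from `inner_rows` but stays in `left_rows` with empty customer columns, which mirrors the dropped-records symptom in the question.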

Question 2:

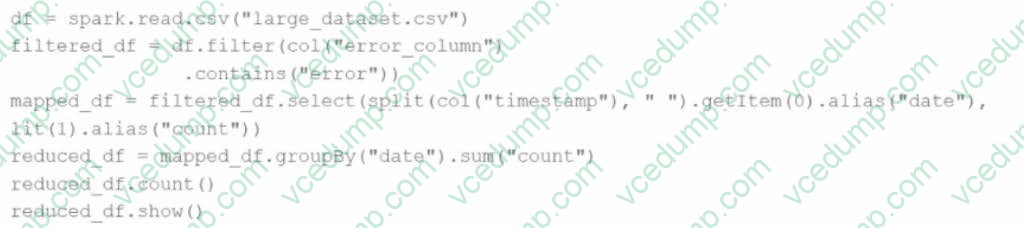

Given the code:

df = spark.read.csv("large_dataset.csv")

filtered_df = df.filter(col("error_column").contains("error"))

mapped_df = filtered_df.select(split(col("timestamp")," ").getItem(0).alias("date"), lit(1).alias("count"))

reduced_df = mapped_df.groupBy("date").sum("count")

reduced_df.count()

reduced_df.show()

At which point will Spark actually begin processing the data?

A. When the filter transformation is applied

B. When the count action is applied

C. When the groupBy transformation is applied

D. When the show action is applied
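Spark transformations such as `filter`, `select`, and `groupBy` are lazy: they only build up a plan, and work starts when an action is called. As a rough analogy, the sketch below uses plain Python generators rather than Spark; the `read_rows` function and the `log` list are hypothetical helpers that record when rows are actually read.

```python
# Plain-Python analogy for lazy evaluation (generators, not Spark).
log = []  # records each row at the moment it is actually read


def read_rows():
    for row in ["2024-01-01 error", "2024-01-01 ok", "2024-01-02 error"]:
        log.append(row)
        yield row


rows = read_rows()                              # nothing is read yet
filtered = (r for r in rows if "error" in r)    # still nothing is read
dates = (r.split(" ")[0] for r in filtered)     # still nothing is read
reads_before_action = len(log)

count = sum(1 for _ in dates)                   # the "action": work happens here
reads_after_action = len(log)
print(reads_before_action, reads_after_action, count)
```

The analogy only covers the first action: a generator is exhausted after one pass, whereas a second Spark action on the same DataFrame re-evaluates the plan unless the data is cached.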

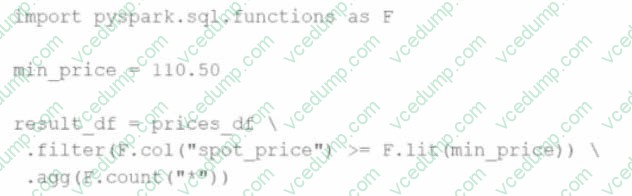

Question 3:

A developer wants to refactor some older Spark code to leverage built-in functions introduced in Spark 3.5.0. The existing code performs array manipulations manually. Which of the following code snippets utilizes new built-in functions in Spark 3.5.0 for array operations?

A. result_df = prices_df.withColumn("valid_price", F.when(F.col("spot_price") > F.lit(min_price), 1).otherwise(0))

B. result_df = prices_df.agg(F.count_if(F.col("spot_price") >= F.lit(min_price)))

C. result_df = prices_df.agg(F.min("spot_price"), F.max("spot_price"))

D. result_df = prices_df.agg(F.count("spot_price").alias("spot_price")).filter(F.col("spot_price") > F.lit("min_price"))

Question 4:

A Spark DataFrame df is cached using the MEMORY_AND_DISK storage level, but the DataFrame is too large to fit entirely in memory. What is the likely behavior when Spark runs out of memory to store the DataFrame?

A. Spark duplicates the DataFrame in both memory and disk. If it doesn't fit in memory, the DataFrame is stored and retrieved from the disk entirely.

B. Spark splits the DataFrame evenly between memory and disk, ensuring balanced storage utilization.

C. Spark will store as much data as possible in memory and spill the rest to disk when memory is full, continuing processing with performance overhead.

D. Spark stores the frequently accessed rows in memory and less frequently accessed rows on disk, utilizing both resources to offer balanced performance.
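With the MEMORY_AND_DISK storage level, Spark keeps the partitions that fit in memory and stores the partitions that do not fit on disk, reading them back when needed. The toy class below is a plain-Python model of that partition-level behavior, not Spark's implementation; `MemoryAndDiskCache`, its row-count budget, and the pickle-based spill files are all assumptions made for illustration.

```python
import os
import pickle
import tempfile


class MemoryAndDiskCache:
    """Toy model: keep partitions in memory up to a budget, spill the rest."""

    def __init__(self, memory_budget, spill_dir):
        self.memory_budget = memory_budget  # max rows in memory (hypothetical unit)
        self.spill_dir = spill_dir
        self.in_memory = {}
        self.on_disk = {}
        self.used = 0

    def put(self, part_id, rows):
        if self.used + len(rows) <= self.memory_budget:
            self.in_memory[part_id] = rows
            self.used += len(rows)
        else:
            # Partition does not fit: write it to disk instead of dropping it.
            path = os.path.join(self.spill_dir, f"part-{part_id}.pkl")
            with open(path, "wb") as fh:
                pickle.dump(rows, fh)
            self.on_disk[part_id] = path

    def get(self, part_id):
        if part_id in self.in_memory:
            return self.in_memory[part_id]
        with open(self.on_disk[part_id], "rb") as fh:  # slower path
            return pickle.load(fh)


with tempfile.TemporaryDirectory() as tmp:
    cache = MemoryAndDiskCache(memory_budget=5, spill_dir=tmp)
    partitions = {0: [1, 2, 3], 1: [4, 5, 6], 2: [7, 8]}
    for pid, rows in partitions.items():
        cache.put(pid, rows)
    memory_ids = sorted(cache.in_memory)
    disk_ids = sorted(cache.on_disk)
    roundtrip = cache.get(1)  # read back from disk

print(memory_ids, disk_ids, roundtrip)
```

Every partition stays retrievable in this model; the ones on disk simply cost an extra read, which corresponds to the performance overhead mentioned in the options.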

Question 5:

A Spark application suffers from too many small tasks due to excessive partitioning. How can this be fixed without a full shuffle?

A. Use the distinct() transformation to combine similar partitions

B. Use the coalesce() transformation with a lower number of partitions

C. Use the sortBy() transformation to reorganize the data

D. Use the repartition() transformation with a lower number of partitions
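The distinction this question relies on is that `coalesce()` reduces the partition count by merging existing partitions, while `repartition()` redistributes rows with a full shuffle. The sketch below is a plain-Python model of the merging idea only; the `coalesce` helper and its round-robin grouping are assumptions for illustration and do not reflect how Spark chooses which partitions to combine.

```python
# Plain-Python model of coalesce-style merging (not Spark's algorithm).
def coalesce(partitions, n):
    """Merge whole input partitions into n output partitions.

    Each input partition lands in exactly one output partition, so no
    partition is split up and redistributed row by row.
    """
    merged = [[] for _ in range(n)]
    for index, part in enumerate(partitions):
        merged[index % n].extend(part)  # arbitrary grouping, for illustration
    return merged


small_partitions = [[1], [2, 3], [4], [5, 6], [7], [8]]
coalesced = coalesce(small_partitions, 2)
print(coalesced)
```

Six small partitions become two larger ones, and every original partition remains intact inside a single output partition.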

Question 6:

Given the code fragment:

import pyspark.pandas as ps

psdf = ps.DataFrame({'col1': [1, 2], 'col2': [3, 4]})

Which method is used to convert a Pandas API on Spark DataFrame (pyspark.pandas.DataFrame) into a standard PySpark DataFrame (pyspark.sql.DataFrame)?

A. psdf.to_spark()

B. psdf.to_pyspark()

C. psdf.to_pandas()

D. psdf.to_dataframe()

Question 7:

A developer notices that all the post-shuffle partitions in a dataset are smaller than the value set for spark.sql.adaptive.maxShuffledHashJoinLocalMapThreshold. Which type of join will Adaptive Query Execution (AQE) choose in this case?

A. A Cartesian join

B. A shuffled hash join

C. A broadcast nested loop join

D. A sort-merge join

Question 8:

A data engineer wants to create an external table from a JSON file located at /data/input.json with the following requirements:

- Create an external table named users
- Automatically infer schema
- Merge records with differing schemas

Which code snippet should the engineer use?

A. CREATE TABLE users USING json OPTIONS (path '/data/input.json')

B. CREATE EXTERNAL TABLE users USING json OPTIONS (path '/data/input.json')

C. CREATE EXTERNAL TABLE users USING json OPTIONS (path '/data/input.json', mergeSchema 'true')

D. CREATE EXTERNAL TABLE users USING json OPTIONS (path '/data/input.json', schemaMerge 'true')

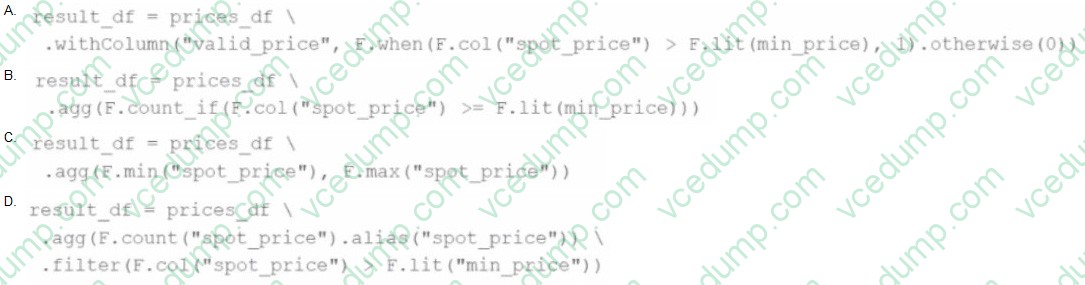

Question 9:

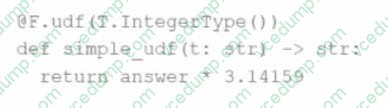

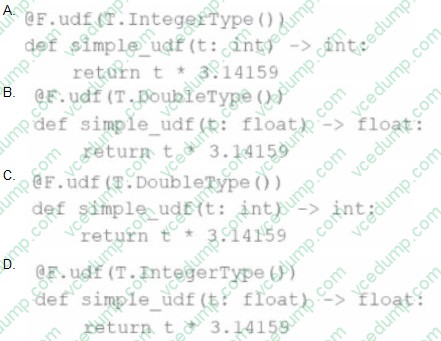

The following code fragment results in an error:

Which code fragment should be used instead?

A. Option A

B. Option B

C. Option C

D. Option D

Question 10:

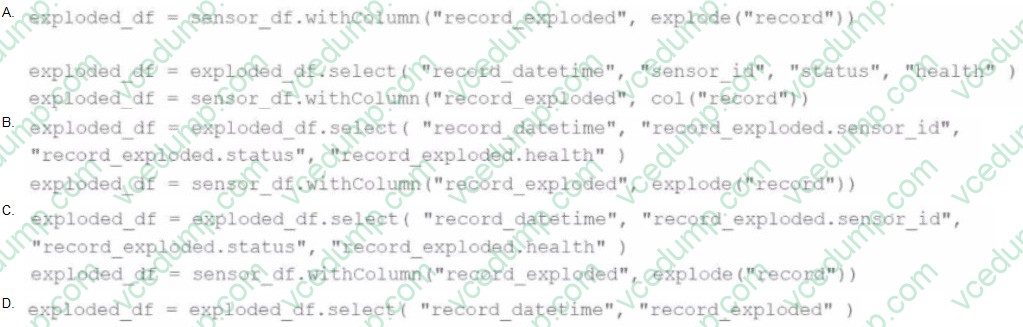

A Data Analyst is working on the DataFrame sensor_df, which contains two columns:

Which code fragment returns a DataFrame that splits the record column into separate columns and has one array item per row?

A. exploded_df = sensor_df.withColumn("record_exploded", explode("record")) exploded_df = exploded_df.select("record_datetime", "sensor_id", "status", "health")

B. exploded_df = exploded_df.select( "record_datetime", "record_exploded.sensor_id", "record_exploded.status", "record_exploded.health" ) exploded_df = sensor_df.withColumn("record_exploded", explode("record"))

C. exploded_df = exploded_df.select( "record_datetime", "record_exploded.sensor_id", "record_exploded.status", "record_exploded.health" ) exploded_df = sensor_df.withColumn("record_exploded", explode("record"))

D. exploded_df = exploded_df.select("record_datetime", "record_exploded")
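The operation this question asks about has two steps: first produce one row per array item, then lift the item's fields into top-level columns. The sketch below is a plain-Python model of those two steps, not PySpark; the field names come from the options above, while the sample values and the assumed shape of `sensor_df` (a timestamp plus an array of structs) are made up for illustration.

```python
# Plain-Python model of explode + nested-field selection (not PySpark).
# Sample values are hypothetical; field names follow the options above.
sensor_rows = [
    {"record_datetime": "2024-01-01T00:00", "record": [
        {"sensor_id": 1, "status": "ok", "health": 98},
        {"sensor_id": 2, "status": "warn", "health": 71},
    ]},
    {"record_datetime": "2024-01-01T00:05", "record": [
        {"sensor_id": 1, "status": "ok", "health": 97},
    ]},
]

# Step 1: "explode" the array, giving one output row per array item.
exploded = [
    {"record_datetime": row["record_datetime"], "record_exploded": item}
    for row in sensor_rows
    for item in row["record"]
]

# Step 2: pull the struct fields up into top-level columns.
flat = [
    {"record_datetime": row["record_datetime"], **row["record_exploded"]}
    for row in exploded
]
print(len(flat), flat[1])
```

The order matters: the nested fields can only be selected after the exploded column exists.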

Related Exams:

DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK: Databricks Certified Associate Developer for Apache Spark 3.0

DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK-35: Databricks Certified Associate Developer for Apache Spark 3.5-Python

DATABRICKS-CERTIFIED-DATA-ANALYST-ASSOCIATE: Databricks Certified Data Analyst Associate

DATABRICKS-CERTIFIED-DATA-ENGINEER-ASSOCIATE: Databricks Certified Data Engineer Associate

DATABRICKS-CERTIFIED-GENERATIVE-AI-ENGINEER-ASSOCIATE: Databricks Certified Generative AI Engineer Associate

DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER: Databricks Certified Data Engineer Professional

DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-SCIENTIST: Databricks Certified Professional Data Scientist

DATABRICKS-MACHINE-LEARNING-ASSOCIATE: Databricks Certified Machine Learning Associate

DATABRICKS-MACHINE-LEARNING-PROFESSIONAL: Databricks Certified Machine Learning Professional

Tips on How to Prepare for the Exams

Certification exams are becoming more important, and more enterprises now require them from job applicants. How can you prepare for the exam effectively, in a short time, and with less effort? How can you get an ideal result, and where can you find the most reliable resources? Vcedump.com answers these questions. Vcedump.com provides not only Databricks exam questions, answers, and explanations but also complete assistance with your exam preparation and certification application. If you are unsure about your DATABRICKS-CERTIFIED-ASSOCIATE-DEVELOPER-FOR-APACHE-SPARK-35 exam preparation or your Databricks certification application, visit Vcedump.com to find a solution.