Exam Details

Exam Code: PROFESSIONAL-MACHINE-LEARNING-ENGINEER

Exam Name: Professional Machine Learning Engineer

Certification: Google Certifications

Vendor: Google

Total Questions: 282 Q&As

Last Updated: May 24, 2025

Google Google Certifications PROFESSIONAL-MACHINE-LEARNING-ENGINEER Questions & Answers

-

Question 271:

You recently created a new Google Cloud project. After testing that you can submit a Vertex AI Pipeline job from the Cloud Shell, you want to use a Vertex AI Workbench user-managed notebook instance to run your code from that instance. You created the instance and ran the code but this time the job fails with an insufficient permissions error. What should you do?

A. Ensure that the Workbench instance that you created is in the same region of the Vertex AI Pipelines resources you will use.

B. Ensure that the Vertex AI Workbench instance is on the same subnetwork of the Vertex AI Pipeline resources that you will use.

C. Ensure that the Vertex AI Workbench instance is assigned the Identity and Access Management (IAM) Vertex AI User role.

D. Ensure that the Vertex AI Workbench instance is assigned the Identity and Access Management (IAM) Notebooks Runner role.

-

Question 272:

You work for a semiconductor manufacturing company. You need to create a real-time application that automates the quality control process. High-definition images of each semiconductor are taken at the end of the assembly line in real time. The photos are uploaded to a Cloud Storage bucket along with tabular data that includes each semiconductor's batch number, serial number, dimensions, and weight. You need to configure model training and serving while maximizing model accuracy. What should you do?

A. Use Vertex AI Data Labeling Service to label the images, and train an AutoML image classification model. Deploy the model, and configure Pub/Sub to publish a message when an image is categorized into the failing class.

B. Use Vertex AI Data Labeling Service to label the images, and train an AutoML image classification model. Schedule a daily batch prediction job that publishes a Pub/Sub message when the job completes.

C. Convert the images into an embedding representation. Import this data into BigQuery, and train a BigQuery ML K-means clustering model with two clusters. Deploy the model and configure Pub/Sub to publish a message when a semiconductor's data is categorized into the failing cluster.

D. Import the tabular data into BigQuery, use Vertex AI Data Labeling Service to label the data and train an AutoML tabular classification model. Deploy the model, and configure Pub/Sub to publish a message when a semiconductor's data is categorized into the failing class.

-

Question 273:

You work for a rapidly growing social media company. Your team builds TensorFlow recommender models in an on-premises CPU cluster. The data contains billions of historical user events and 100,000 categorical features. You notice that as the data increases, the model training time increases. You plan to move the models to Google Cloud. You want to use the most scalable approach that also minimizes training time. What should you do?

A. Deploy the training jobs by using TPU VMs with TPUv3 Pod slices, and use the TPUEmbedding API

B. Deploy the training jobs in an autoscaling Google Kubernetes Engine cluster with CPUs

C. Deploy a matrix factorization model training job by using BigQuery ML

D. Deploy the training jobs by using Compute Engine instances with A100 GPUs, and use the tf.nn.embedding_lookup API

-

Question 274:

You are training and deploying updated versions of a regression model with tabular data by using Vertex AI Pipelines, Vertex AI Training, Vertex AI Experiments, and Vertex AI Endpoints. The model is deployed in a Vertex AI endpoint, and your users call the model by using the Vertex AI endpoint. You want to receive an email when the feature data distribution changes significantly, so you can retrigger the training pipeline and deploy an updated version of your model. What should you do?

A. Use Vertex AI Model Monitoring. Enable prediction drift monitoring on the endpoint, and specify a notification email.

B. In Cloud Logging, create a logs-based alert using the logs in the Vertex AI endpoint. Configure Cloud Logging to send an email when the alert is triggered.

C. In Cloud Monitoring, create a logs-based metric and a threshold alert for the metric. Configure Cloud Monitoring to send an email when the alert is triggered.

D. Export the container logs of the endpoint to BigQuery. Create a Cloud Function to run a SQL query over the exported logs and send an email. Use Cloud Scheduler to trigger the Cloud Function.
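To make "the feature data distribution changes significantly" concrete, here is a minimal sketch of one common way to score the distance between a baseline feature histogram and a recent one: the Jensen-Shannon divergence. The function name `js_divergence`, the example histograms, and the `0.3` alert threshold are assumptions for illustration only; they are not taken from the question or from any Vertex AI configuration.

```python
import math


def js_divergence(p, q):
    """Jensen-Shannon divergence (log base 2) between two discrete distributions.

    Both inputs are lists of probabilities over the same bins, each summing to 1.
    The result is 0 for identical distributions and 1 for disjoint ones.
    """
    # Mixture distribution: the per-bin average of p and q.
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(x, y):
        # Kullback-Leibler divergence; bins with zero mass in x contribute nothing.
        return sum(a * math.log2(a / b) for a, b in zip(x, y) if a > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


# Hypothetical histograms of one feature: an earlier window vs. a recent window.
baseline = [0.25, 0.25, 0.25, 0.25]
recent = [0.70, 0.10, 0.10, 0.10]

ALERT_THRESHOLD = 0.3  # hypothetical value, chosen only for this example

score = js_divergence(baseline, recent)
drifted = score > ALERT_THRESHOLD
print(f"JS divergence = {score:.3f}, drifted = {drifted}")
```

A managed monitoring service performs this kind of comparison on a schedule and handles the notification; the sketch only illustrates the underlying distance computation.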

-

Question 275:

You have trained an XGBoost model that you plan to deploy on Vertex AI for online prediction. You are now uploading your model to Vertex AI Model Registry, and you need to configure the explanation method that will serve online prediction requests to be returned with minimal latency. You also want to be alerted when feature attributions of the model meaningfully change over time. What should you do?

A. 1. Specify sampled Shapley as the explanation method with a path count of 5.
2. Deploy the model to Vertex AI Endpoints.
3. Create a Model Monitoring job that uses prediction drift as the monitoring objective.

B. 1. Specify Integrated Gradients as the explanation method with a path count of 5.
2. Deploy the model to Vertex AI Endpoints.
3. Create a Model Monitoring job that uses prediction drift as the monitoring objective.

C. 1. Specify sampled Shapley as the explanation method with a path count of 50.
2. Deploy the model to Vertex AI Endpoints.
3. Create a Model Monitoring job that uses training-serving skew as the monitoring objective.

D. 1. Specify Integrated Gradients as the explanation method with a path count of 50.
2. Deploy the model to Vertex AI Endpoints.
3. Create a Model Monitoring job that uses training-serving skew as the monitoring objective.

-

Question 276:

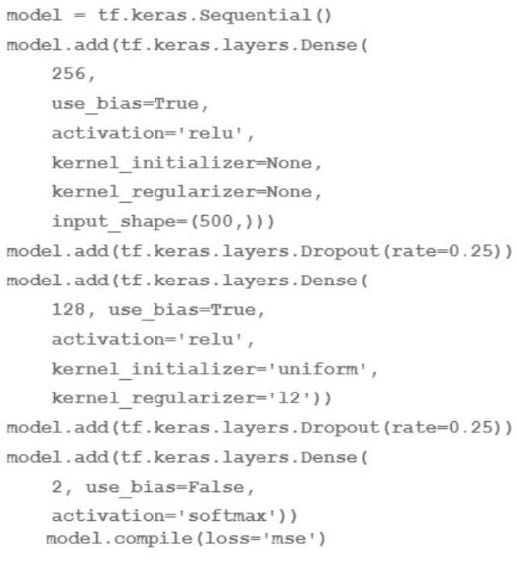

You are going to train a DNN regression model with Keras APIs using this code:

How many trainable weights does your model have? (The arithmetic below is correct.)

A. 501*256+257*128+2 = 161154

B. 500*256+256*128+128*2 = 161024

C. 501*256+257*128+128*2 = 161408

D. 500*256*0.25+256*128*0.25+128*2 = 40448
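The terms in the options follow the usual rule for counting trainable weights in a fully connected layer: one weight per input-output pair, plus one bias per output unit when a bias is used. The sketch below applies that rule. The layer widths (500 inputs, then 256, 128, and 2 units) are assumptions inferred from the numbers in the options, since the code itself is only available as an image; `dense_params` is a hypothetical helper, not a Keras function.

```python
def dense_params(n_in: int, n_out: int, use_bias: bool = True) -> int:
    """Trainable weights in one fully connected (dense) layer."""
    # Kernel has n_in * n_out entries; the bias adds one value per output unit.
    return n_in * n_out + (n_out if use_bias else 0)


# Hypothetical layer widths inferred from the answer options.
hidden_1 = dense_params(500, 256)  # equals 501 * 256
hidden_2 = dense_params(256, 128)  # equals 257 * 128

# The options differ in how they treat the final layer, so show both cases.
output_with_bias = dense_params(128, 2)
output_without_bias = dense_params(128, 2, use_bias=False)

print(hidden_1, hidden_2, output_with_bias, output_without_bias)
```

Dropout layers, if the model contains any, add no trainable weights: dropout only zeroes activations at training time, so a dropout rate never scales the parameter count.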

-

Question 277:

Your task is to classify whether a company logo is present in an image. You found that 96% of the data does not include a logo, so you are dealing with a data imbalance problem. Which metric should you use to evaluate the model?

A. F1 Score

B. RMSE

C. F Score with higher precision weighting than recall

D. F Score with higher recall weighting than precision
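The weighted F score mentioned in the options is the F-beta score, where beta greater than 1 weights recall more heavily and beta less than 1 weights precision more heavily. The sketch below computes it from precision and recall; the helper name `f_beta` and the example precision and recall values are assumptions chosen for illustration, not data from the question.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """F-beta score: weighted harmonic mean of precision and recall."""
    if precision == 0.0 and recall == 0.0:
        return 0.0  # convention: avoid dividing by zero when both are zero
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Hypothetical classifier on the logo task: decent recall, weaker precision.
precision, recall = 0.5, 0.8

f_half = f_beta(precision, recall, beta=0.5)  # favors precision
f_one = f_beta(precision, recall, beta=1.0)   # plain F1
f_two = f_beta(precision, recall, beta=2.0)   # favors recall

print(f"F0.5 = {f_half:.3f}, F1 = {f_one:.3f}, F2 = {f_two:.3f}")

# Why accuracy misleads here: predicting "no logo" for every image
# is 96% accurate on a 96/4 split, yet finds none of the logos.
accuracy_all_negative = 96 / 100
recall_all_negative = 0 / 4
```

Because recall exceeds precision in this example, the score rises as beta increases; with the values reversed, it would fall.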

-

Question 278:

You work at a large organization that recently decided to move their ML and data workloads to Google Cloud. The data engineering team has exported the structured data to a Cloud Storage bucket in Avro format. You need to propose a workflow that performs analytics, creates features, and hosts the features that your ML models use for online prediction. How should you configure the pipeline?

A. Ingest the Avro files into Cloud Spanner to perform analytics. Use a Dataflow pipeline to create the features, and store them in BigQuery for online prediction.

B. Ingest the Avro files into BigQuery to perform analytics. Use a Dataflow pipeline to create the features, and store them in Vertex AI Feature Store for online prediction.

C. Ingest the Avro files into BigQuery to perform analytics. Use BigQuery SQL to create features, and store them in a separate BigQuery table for online prediction.

D. Ingest the Avro files into Cloud Spanner to perform analytics. Use a Dataflow pipeline to create the features, and store them in Vertex AI Feature Store for online prediction.

-

Question 279:

You work at a gaming startup that has several terabytes of structured data in Cloud Storage. This data includes gameplay time data, user metadata, and game metadata. You want to build a model that recommends new games to users and that requires the least amount of coding. What should you do?

A. Load the data in BigQuery. Use BigQuery ML to train an Autoencoder model.

B. Load the data in BigQuery. Use BigQuery ML to train a matrix factorization model.

C. Read data to a Vertex AI Workbench notebook. Use TensorFlow to train a two-tower model.

D. Read data to a Vertex AI Workbench notebook. Use TensorFlow to train a matrix factorization model.

-

Question 280:

You are developing a custom image classification model in Python. You plan to run your training application on Vertex AI. Your input dataset contains several hundred thousand small images. You need to determine how to store and access the images for training. You want to maximize data throughput and minimize training time while reducing the amount of additional code. What should you do?

A. Store image files in Cloud Storage and access them directly.

B. Store image files in Cloud Storage and access them by using serialized records.

C. Store image files in Cloud Filestore, and access them by using serialized records.

D. Store image files in Cloud Filestore and access them directly by using an NFS mount point.
