Exam Details

Exam Code: PROFESSIONAL-MACHINE-LEARNING-ENGINEER

Exam Name: Professional Machine Learning Engineer

Certification: Google Certifications

Vendor: Google

Total Questions: 282 Q&As

Last Updated: Jul 07, 2025

Google Google Certifications PROFESSIONAL-MACHINE-LEARNING-ENGINEER Questions & Answers

-

Question 131:

You are responsible for building a unified analytics environment across a variety of on-premises data marts. Your company is experiencing data quality and security challenges when integrating data across the servers, caused by the use of a wide range of disconnected tools and temporary solutions. You need a fully managed, cloud-native data integration service that will lower the total cost of work and reduce repetitive work. Some members of your team prefer a codeless interface for building Extract, Transform, Load (ETL) processes. Which service should you use?

A. Dataflow

B. Dataprep

C. Apache Flink

D. Cloud Data Fusion

-

Question 132:

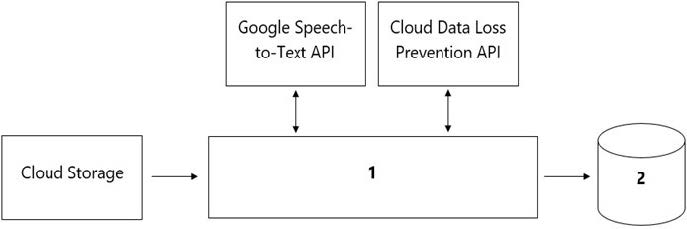

Your organization's call center has asked you to develop a model that analyzes customer sentiments in each call. The call center receives over one million calls daily, and data is stored in Cloud Storage. The data collected must not leave the region in which the call originated, and no Personally Identifiable Information (PII) can be stored or analyzed. The data science team has a third-party tool for visualization and access which requires a SQL ANSI-2011 compliant interface. You need to select components for data processing and for analytics. How should the data pipeline be designed?

A. 1 = Dataflow, 2 = BigQuery

B. 1 = Pub/Sub, 2 = Datastore

C. 1 = Dataflow, 2 = Cloud SQL

D. 1 = Cloud Function, 2 = Cloud SQL

-

Question 133:

You are an ML engineer at a global shoe store. You manage the ML models for the company's website. You are asked to build a model that will recommend new products to the user based on their purchase behavior and similarity with other users. What should you do?

A. Build a classification model

B. Build a knowledge-based filtering model

C. Build a collaborative-based filtering model

D. Build a regression model using the features as predictors
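To make the phrase "purchase behavior and similarity with other users" concrete, here is a minimal sketch of user-based collaborative filtering in plain Python. The purchase data, the function names, and the choice of cosine similarity are all assumptions made for illustration; a production recommender would typically use a dedicated library and far more data.

```python
from math import sqrt

# Hypothetical purchase data: user -> {product: number of purchases}.
PURCHASES = {
    "alice": {"runner": 2, "boot": 1},
    "bob": {"runner": 1, "boot": 1, "sandal": 3},
    "carol": {"loafer": 2},
}


def cosine_similarity(a, b):
    """Cosine similarity between two sparse purchase vectors."""
    shared = set(a) & set(b)
    dot = sum(a[p] * b[p] for p in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(user, purchases, top_n=3):
    """Rank products the user has not bought, weighted by similar users' purchases."""
    own = purchases[user]
    scores = {}
    for other, basket in purchases.items():
        if other == user:
            continue
        sim = cosine_similarity(own, basket)
        if sim <= 0:
            continue  # users with no overlapping purchases contribute nothing
        for product, count in basket.items():
            if product not in own:
                scores[product] = scores.get(product, 0.0) + sim * count
    # Highest score first; ties broken alphabetically for determinism.
    ranked = sorted(scores, key=lambda p: (-scores[p], p))
    return ranked[:top_n]
```

In this toy data, "alice" overlaps with "bob" but not with "carol", so only products from bob's basket can be suggested to her.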

-

Question 134:

You recently developed a deep learning model. To test your new model, you trained it for a few epochs on a large dataset. You observe that the training and validation losses barely changed during the training run. You want to quickly debug your model. What should you do first?

A. Verify that your model can obtain a low loss on a small subset of the dataset

B. Add handcrafted features to inject your domain knowledge into the model

C. Use the Vertex AI hyperparameter tuning service to identify a better learning rate

D. Use hardware accelerators and train your model for more epochs
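The "small subset" check mentioned in the options can be sketched as follows. This is only an illustration under loud assumptions: a one-feature linear model trained with hand-written gradient descent stands in for the deep learning model, and the helper names and the tolerance are hypothetical. The idea is that a correctly wired model and training loop should be able to drive the loss close to zero on a handful of examples.

```python
def train_on_subset(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w*x + b with plain gradient descent and return the loss history."""
    w, b = 0.0, 0.0
    n = len(xs)
    losses = []
    for _ in range(steps):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        losses.append(sum(e * e for e in errors) / n)  # mean squared error
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return losses


def can_overfit(losses, tolerance=1e-3):
    """True if the loss dropped below the tolerance during the run."""
    return losses[-1] < tolerance and losses[-1] < losses[0]


# Hypothetical tiny subset drawn from y = 2x + 1.
subset_x = [0.0, 1.0, 2.0, 3.0]
subset_y = [1.0, 3.0, 5.0, 7.0]

healthy_run = train_on_subset(subset_x, subset_y)
# A zero learning rate mimics a broken setup where the loss never moves.
stuck_run = train_on_subset(subset_x, subset_y, learning_rate=0.0)
```

If the loss stays flat even on such a tiny subset, the problem is more likely a bug in the model, data pipeline, or optimizer setup than a lack of data or compute.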

-

Question 135:

You have deployed multiple versions of an image classification model on AI Platform. You want to monitor the performance of the model versions over time. How should you perform this comparison?

A. Compare the loss performance for each model on a held-out dataset.

B. Compare the loss performance for each model on the validation data.

C. Compare the receiver operating characteristic (ROC) curve for each model using the What-If Tool.

D. Compare the mean average precision across the models using the Continuous Evaluation feature.

-

Question 136:

You trained a text classification model. You have the following SignatureDefs:

You started a TensorFlow-serving component server and tried to send an HTTP request to get a prediction using:

headers = {"content-type": "application/json"}

json_response = requests.post('http://localhost:8501/v1/models/text_model:predict', data=data, headers=headers)

What is the correct way to write the predict request?

A. data = json.dumps({"signature_name": "serving_default", "instances": [['ab', 'bc', 'cd']]})

B. data = json.dumps({"signature_name": "serving_default", "instances": [['a', 'b', 'c', 'd', 'e', 'f']]})

C. data = json.dumps({"signature_name": "serving_default", "instances": [['a', 'b', 'c'], ['d', 'e', 'f']]})

D. data = json.dumps({"signature_name": "serving_default", "instances": [['a', 'b'], ['c', 'd'], ['e', 'f']]})
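For reference, the general shape of a TensorFlow Serving REST predict request in row format can be sketched as below. The helper names are hypothetical, and the example batch is an arbitrary placeholder: the actual nesting of `instances` must match the input shape in the model's SignatureDef, which is not reproduced in the question text above. Sending the request needs the third-party `requests` package and a running server, so that part is kept in a separate function that is not called here.

```python
import json


def build_predict_payload(instances, signature_name="serving_default"):
    """Serialize a predict request body in TensorFlow Serving's row format."""
    return json.dumps({"signature_name": signature_name, "instances": instances})


def send_predict_request(
    payload, url="http://localhost:8501/v1/models/text_model:predict"
):
    """POST the payload; needs the `requests` package and a running model server."""
    import requests  # imported lazily so the payload helper works without it

    headers = {"content-type": "application/json"}
    response = requests.post(url, data=payload, headers=headers)
    response.raise_for_status()
    return response.json()


# Hypothetical batch: one instance holding two strings. The real shape must
# follow the model's SignatureDef, which the question text does not show.
example_payload = build_predict_payload([["hello", "world"]])
```

Each element of `instances` is one input example, so the outer list length is the batch size and the inner structure is the per-example tensor.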

-

Question 137:

You are training an LSTM-based model on AI Platform to summarize text using the following job submission script:

gcloud ai-platform jobs submit training $JOB_NAME \
  --package-path $TRAINER_PACKAGE_PATH \
  --module-name $MAIN_TRAINER_MODULE \
  --job-dir $JOB_DIR \
  --region $REGION \
  --scale-tier basic \
  -- \
  --epochs 20 \
  --batch_size=32 \
  --learning_rate=0.001

You want to ensure that training time is minimized without significantly compromising the accuracy of your model. What should you do?

A. Modify the 'epochs' parameter.

B. Modify the 'scale-tier' parameter.

C. Modify the 'batch size' parameter.

D. Modify the 'learning rate' parameter.

-

Question 138:

You are an ML engineer at a large grocery retailer with stores in multiple regions. You have been asked to create an inventory prediction model. Your model's features include region, location, historical demand, and seasonal popularity. You want the algorithm to learn from new inventory data on a daily basis. Which algorithms should you use to build the model?

A. Classification

B. Reinforcement Learning

C. Recurrent Neural Networks (RNN)

D. Convolutional Neural Networks (CNN)

-

Question 139:

You are building a real-time prediction engine that streams files which may contain Personally Identifiable Information (PII) to Google Cloud. You want to use the Cloud Data Loss Prevention (DLP) API to scan the files. How should you ensure that the PII is not accessible by unauthorized individuals?

A. Stream all files to Google Cloud, and then write the data to BigQuery. Periodically conduct a bulk scan of the table using the DLP API.

B. Stream all files to Google Cloud, and write batches of the data to BigQuery. While the data is being written to BigQuery, conduct a bulk scan of the data using the DLP API.

C. Create two buckets of data: Sensitive and Non-sensitive. Write all data to the Non-sensitive bucket. Periodically conduct a bulk scan of that bucket using the DLP API, and move the sensitive data to the Sensitive bucket.

D. Create three buckets of data: Quarantine, Sensitive, and Non-sensitive. Write all data to the Quarantine bucket. Periodically conduct a bulk scan of that bucket using the DLP API, and move the data to either the Sensitive or Non-Sensitive bucket.
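The bucket-routing idea described in the last option can be sketched in plain Python. Everything here is an assumption for illustration: dictionaries stand in for Cloud Storage buckets, and a pair of regular expressions stands in for a real Cloud DLP inspection call, which detects far more information types than these two patterns.

```python
import re

# Stand-in for a DLP inspection: flags only e-mail addresses and US-style SSNs.
PII_PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
]


def contains_pii(text):
    """Return True if any stand-in PII pattern matches the text."""
    return any(pattern.search(text) for pattern in PII_PATTERNS)


def route_quarantined(quarantine):
    """Split quarantined objects into (sensitive, non_sensitive) mappings."""
    sensitive, non_sensitive = {}, {}
    for name, text in quarantine.items():
        target = sensitive if contains_pii(text) else non_sensitive
        target[name] = text
    return sensitive, non_sensitive


# Hypothetical quarantine contents: object name -> file text.
quarantine_bucket = {
    "a.txt": "contact jane@example.com",
    "b.txt": "order 42 shipped",
}
sensitive_bucket, non_sensitive_bucket = route_quarantined(quarantine_bucket)
```

The point of the pattern is that nothing is readable from the general-access location until it has been scanned, so unscanned files never sit next to cleared ones.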

-

Question 140:

You have written unit tests for a Kubeflow Pipeline that require custom libraries. You want to automate the execution of unit tests with each new push to your development branch in Cloud Source Repositories. What should you do?

A. Write a script that sequentially performs the push to your development branch and executes the unit tests on Cloud Run.

B. Using Cloud Build, set an automated trigger to execute the unit tests when changes are pushed to your development branch.

C. Set up a Cloud Logging sink to a Pub/Sub topic that captures interactions with Cloud Source Repositories. Configure a Pub/Sub trigger for Cloud Run, and execute the unit tests on Cloud Run.

D. Set up a Cloud Logging sink to a Pub/Sub topic that captures interactions with Cloud Source Repositories. Execute the unit tests using a Cloud Function that is triggered when messages are sent to the Pub/Sub topic.

