Exam Details

Exam Code: HDPCD

Exam Name: Hortonworks Data Platform Certified Developer

Certification: Hortonworks Certifications

Vendor: Hortonworks

Total Questions: 60 Q&As

Last Updated: Jul 03, 2025

Hortonworks Hortonworks Certifications HDPCD Questions & Answers

-

Question 1:

What types of algorithms are difficult to express in MapReduce v1 (MRv1)?

A. Algorithms that require applying the same mathematical function to large numbers of individual binary records.

B. Relational operations on large amounts of structured and semi-structured data.

C. Algorithms that require global, shared state.

D. Large-scale graph algorithms that require one-step link traversal.

E. Text analysis algorithms on large collections of unstructured text (e.g., Web crawls).

-

Question 2:

Which one of the following statements is true regarding a MapReduce job?

A. The job's Partitioner shuffles and sorts all (key, value) pairs and sends the output to all reducers

B. The default Hash Partitioner sends key-value pairs with the same key to the same Reducer

C. The reduce method is invoked once for each unique value

D. The Mapper must sort its output of (key, value) pairs in descending order based on value
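
As a study aid for the partitioning concept in this question, here is a minimal sketch of hash partitioning. It assumes the usual scheme of masking the key's hash to a non-negative number and taking the remainder by the number of reducers. The names `partition_for` and `route` are hypothetical, and `zlib.crc32` is only a stand-in for the key's real hash function.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_reducers: int) -> int:
    """Pick a reducer index for a key (illustrative, not Hadoop's own code)."""
    # Stand-in hash: any deterministic hash of the key works for this sketch.
    key_hash = zlib.crc32(key.encode("utf-8"))
    # Mask to a non-negative value, then take the remainder.
    return (key_hash & 0x7FFFFFFF) % num_reducers


def route(pairs, num_reducers):
    """Group (key, value) pairs by the reducer index chosen for each key."""
    buckets = defaultdict(list)
    for key, value in pairs:
        buckets[partition_for(key, num_reducers)].append((key, value))
    return dict(buckets)


pairs = [("apple", 1), ("pear", 1), ("apple", 1), ("fig", 1), ("pear", 1)]
routed = route(pairs, num_reducers=3)

for reducer, items in sorted(routed.items()):
    print(reducer, items)
```

Because the reducer index depends only on the key and the reducer count, every pair carrying a given key lands in the same bucket, whichever mapper emitted it.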

-

Question 3:

Which of the following best describes when the reduce method is first called in a MapReduce job?

A. Reducers start copying intermediate key-value pairs from each Mapper as soon as it has completed. The programmer can configure in the job what percentage of the intermediate data should arrive before the reduce method begins.

B. Reducers start copying intermediate key-value pairs from each Mapper as soon as it has completed. The reduce method is called only after all intermediate data has been copied and sorted.

C. Reduce methods and map methods all start at the beginning of a job, in order to provide optimal performance for map-only or reduce-only jobs.

D. Reducers start copying intermediate key-value pairs from each Mapper as soon as it has completed. The reduce method is called as soon as the intermediate key-value pairs start to arrive.

-

Question 4:

The Hadoop framework provides a mechanism for coping with machine issues such as faulty configuration or impending hardware failure. MapReduce detects that one or more machines are performing poorly and starts additional copies of a map or reduce task. All the copies run simultaneously, and the results of whichever copy finishes first are used. This is called:

A. Combine

B. IdentityMapper

C. IdentityReducer

D. Default Partitioner

E. Speculative Execution

-

Question 5:

How are keys and values presented and passed to the reducers during a standard sort and shuffle phase of MapReduce?

A. Keys are presented to a reducer in sorted order; values for a given key are not sorted.

B. Keys are presented to a reducer in sorted order; values for a given key are sorted in ascending order.

C. Keys are presented to a reducer in random order; values for a given key are not sorted.

D. Keys are presented to a reducer in random order; values for a given key are sorted in ascending order.

-

Question 6:

Which one of the following is NOT a valid Oozie action?

A. mapreduce

B. pig

C. hive

D. mrunit

-

Question 7:

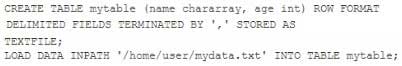

Given the following Hive commands:

Which one of the following statements Is true?

A. The file mydata.txt is copied to a subfolder of /apps/hive/warehouse

B. The file mydata.txt is moved to a subfolder of /apps/hive/warehouse

C. The file mydata.txt is copied into Hive's underlying relational database

D. The file mydata.txt does not move from its current location in HDFS

-

Question 8:

Which Hadoop component is responsible for managing the distributed file system metadata?

A. NameNode

B. Metanode

C. DataNode

D. NameSpaceManager

-

Question 9:

You need to run the same job many times with minor variations. Rather than hardcoding all job configuration options in your driver code, you've decided to have your Driver subclass org.apache.hadoop.conf.Configured and implement the org.apache.hadoop.util.Tool interface.

Identify which invocation correctly passes mapred.job.name with a value of Example to Hadoop.

A. hadoop "mapred.job.name=Example" MyDriver input output

B. hadoop MyDriver mapred.job.name=Example input output

C. hadoop MyDriver -D mapred.job.name=Example input output

D. hadoop setproperty mapred.job.name=Example MyDriver input output

E. hadoop setproperty ("mapred.job.name=Example") MyDriver input output

-

Question 10:

Given a directory of files with the following structure: line number, tab character, string.

Example:

1	abialkjfjkaoasdfjksdlkjhqweroij

2	kadfjhuwqounahagtnbvaswslmnbfgy

3	kjfteiomndscxeqalkzhtopedkfsikj

You want to send each line as one record to your Mapper. Which InputFormat should you use to complete the line: conf.setInputFormat(____.class); ?

A. SequenceFileAsTextInputFormat

B. SequenceFileInputFormat

C. KeyValueFileInputFormat

D. BDBInputFormat
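
To make the record layout in this question concrete, the following sketch shows how a line of the form "line number, tab character, string" could be split into a key and a value, one record per line. It illustrates the file structure only and is not Hadoop code. The helper name `split_record` is hypothetical, and treating a line without a tab as a key with an empty value is an assumption of this sketch.

```python
def split_record(line: str, separator: str = "\t"):
    """Split one input line into (key, value) at the first separator."""
    # str.partition splits at the first occurrence only, so any later tabs
    # stay inside the value. With no separator present, the whole line becomes
    # the key and the value is an empty string (an assumption of this sketch).
    key, _, value = line.rstrip("\n").partition(separator)
    return key, value


lines = [
    "1\tabialkjfjkaoasdfjksdlkjhqweroij\n",
    "2\tkadfjhuwqounahagtnbvaswslmnbfgy\n",
    "3\tkjfteiomndscxeqalkzhtopedkfsikj\n",
]

# One record per line: the line number is the key, the string is the value.
records = [split_record(line) for line in lines]

for key, value in records:
    print(key, value)
```

Each line yields exactly one record, with the text before the first tab as the key and the remainder as the value.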
