Exam Details

Exam Code: DATABRICKS-MACHINE-LEARNING-PROFESSIONAL

Exam Name: Databricks Certified Machine Learning Professional

Certification: Databricks Certifications

Vendor: Databricks

Total Questions: 60 Q&As

Last Updated: Jul 02, 2025

Databricks Certifications: DATABRICKS-MACHINE-LEARNING-PROFESSIONAL Questions & Answers

-

Question 41:

A machine learning engineer wants to log and deploy a model as an MLflow pyfunc model. They have custom preprocessing that needs to be completed on feature variables prior to fitting the model or computing predictions using that model.

They decide to wrap this preprocessing in a custom model class ModelWithPreprocess, where the preprocessing is performed when calling fit and when calling predict. They then log the fitted model of the ModelWithPreprocess class as a pyfunc model.

Which of the following is a benefit of this approach when loading the logged pyfunc model for downstream deployment?

A. The pyfunc model can be used to deploy models in a parallelizable fashion

B. The same preprocessing logic will automatically be applied when calling fit

C. The same preprocessing logic will automatically be applied when calling predict

D. This approach has no impact when loading the logged pyfunc model for downstream deployment

E. There is no longer a need for pipeline-like machine learning objects
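
To make the setup in Question 41 concrete, the sketch below shows a wrapper class whose fit and predict both send inputs through the same preprocessing step. It is kept dependency-free for illustration: in an actual MLflow workflow the class would subclass `mlflow.pyfunc.PythonModel` and be logged with `mlflow.pyfunc.log_model`. The mean-centering step and the one-feature linear fit are hypothetical stand-ins and do not come from the exam material.

```python
from statistics import mean


class ModelWithPreprocess:
    """Hypothetical wrapper that bundles preprocessing with the model.

    In real use this would subclass mlflow.pyfunc.PythonModel; the
    predict(context, model_input) signature mirrors that interface.
    """

    def __init__(self):
        # All three values are learned in fit and reused in predict.
        self.center_ = None
        self.slope_ = None
        self.intercept_ = None

    def preprocess(self, rows):
        # Stand-in preprocessing: center each feature value.
        return [x - self.center_ for x in rows]

    def fit(self, rows, targets):
        self.center_ = mean(rows)
        x = self.preprocess(rows)
        # Least-squares line on the centered feature.
        self.slope_ = sum(a * b for a, b in zip(x, targets)) / sum(a * a for a in x)
        self.intercept_ = mean(targets)
        return self

    def predict(self, context, model_input):
        # The same preprocessing runs here, so callers pass raw features.
        x = self.preprocess(model_input)
        return [self.intercept_ + self.slope_ * a for a in x]
```

Because the preprocessing lives inside the class, any code that loads the model and calls predict passes raw feature values and does not need its own copy of the preprocessing logic.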

-

Question 42:

Which of the following Databricks-managed MLflow capabilities is a centralized model store?

A. Models

B. Model Registry

C. Model Serving

D. Feature Store

E. Experiments

-

Question 43:

Which of the following MLflow operations can be used to delete a model from the MLflow Model Registry?

A. client.transition_model_version_stage

B. client.delete_model_version

C. client.update_registered_model

D. client.delete_model

E. client.delete_registered_model
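
As a hedged illustration of the registry operations named in Question 43, the sketch below contrasts removing a single version with removing a registered model entirely. `delete_model_version` and `delete_registered_model` are methods of `mlflow.tracking.MlflowClient`; the two helper function names are hypothetical, and the client is passed in as an argument so the sketch does not require a configured registry.

```python
def delete_one_version(client, name, version):
    """Remove a single version; the registered model itself remains."""
    client.delete_model_version(name=name, version=version)


def delete_whole_model(client, name):
    """Remove the registered model together with all of its versions."""
    client.delete_registered_model(name=name)


# Usage, assuming MLflow is installed and a registry is configured:
#   from mlflow.tracking import MlflowClient
#   delete_whole_model(MlflowClient(), "my_model")  # "my_model" is a placeholder
```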

-

Question 44:

A machine learning engineer wants to programmatically create a new Databricks Job whose schedule depends on the result of some automated tests in a machine learning pipeline. Which of the following Databricks tools can be used to programmatically create the Job?

A. MLflow APIs

B. AutoML APIs

C. MLflow Client

D. Jobs cannot be created programmatically

E. Databricks REST APIs
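
The scenario in Question 44 can be sketched as a request to the Databricks Jobs API (`POST /api/2.1/jobs/create`). The example below only builds the request and does not send it. The host, token, job name, notebook path, cluster ID, and the rule that maps the test outcome to a schedule are all placeholders chosen for illustration.

```python
import json
import urllib.request


def build_create_job_request(host, token, tests_passed):
    """Build (but do not send) a Jobs API 2.1 create request.

    The schedule is chosen from the test outcome, as in the scenario.
    """
    # Hypothetical policy: daily at 02:00 if tests passed, else Mondays at 02:00.
    cron = "0 0 2 * * ?" if tests_passed else "0 0 2 ? * MON"
    payload = {
        "name": "ml-pipeline-retrain",  # placeholder job name
        "tasks": [
            {
                "task_key": "retrain",
                "notebook_task": {"notebook_path": "/Repos/ml/retrain"},  # placeholder
                "existing_cluster_id": "<cluster-id>",  # placeholder
            }
        ],
        "schedule": {
            "quartz_cron_expression": cron,
            "timezone_id": "UTC",
        },
    }
    return urllib.request.Request(
        url=f"{host}/api/2.1/jobs/create",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would submit it; a successful response is a JSON document that includes the new job's `job_id`.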

-

Question 45:

A data scientist is using MLflow to track their machine learning experiment. As a part of each MLflow run, they are performing hyperparameter tuning. The data scientist would like to have one parent run for the tuning process with a child run for each unique combination of hyperparameter values.

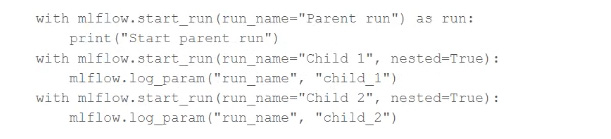

They are using the following code block:

The code block is not nesting the runs in MLflow as they expected.

Which of the following changes does the data scientist need to make to the above code block so that it successfully nests the child runs under the parent run in MLflow?

A. Indent the child run blocks within the parent run block

B. Add the nested=True argument to the parent run

C. Remove the nested=True argument from the child runs

D. Provide the same name to the run_name parameter for all three run blocks

E. Add the nested=True argument to the parent run and remove the nested=True arguments from the child runs
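
The code block referenced in Question 45 is an image and is not reproduced as text, so the following is not a reconstruction of it. It is a minimal sketch of the parent/child structure the question describes, using `mlflow.start_run` with `nested=True` for the child runs. The `tune` function, its `tracker` parameter (which lets a stand-in replace MLflow), the run names, and the `score` callback are hypothetical.

```python
from itertools import product


def tune(grid, score, tracker=None):
    """Log one parent run with one nested child run per combination."""
    if tracker is None:
        import mlflow as tracker  # real MLflow unless a stand-in is passed

    results = []
    with tracker.start_run(run_name="tuning"):  # parent run
        for values in product(*grid.values()):
            params = dict(zip(grid.keys(), values))
            # Child runs are opened inside the parent block with nested=True.
            with tracker.start_run(run_name="trial", nested=True):
                for key, value in params.items():
                    tracker.log_param(key, value)
                metric = score(params)
                tracker.log_metric("score", metric)
            results.append((params, metric))
    return results
```

Each child run is started while the parent's `with` block is still open, which is what lets the tracking server record the parent/child relationship.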

-

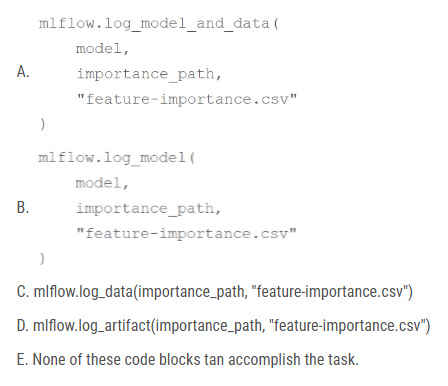

Question 46:

A machine learning engineer wants to log feature importance data from a CSV file at path importance_path with an MLflow run for model model. Which of the following code blocks will accomplish this task inside of an existing MLflow run block?

A. Option A

B. Option B

C. Option C

D. Option D

E. Option E

-

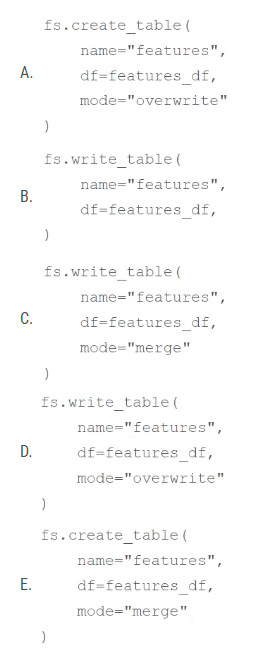

Question 47:

A data scientist has computed updated feature values for all primary key values stored in the Feature Store table features. In addition, feature values for some new primary key values have also been computed. The updated feature values are stored in the DataFrame features_df. They want to replace all data in features with the newly computed data.

Which of the following code blocks can they use to perform this task using the Feature Store Client fs?

A. Option A

B. Option B

C. Option C

D. Option D

E. Option E

-

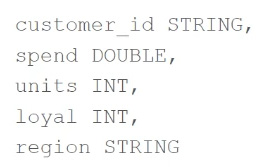

Question 48:

A data scientist has created a Python function compute_features that returns a Spark DataFrame with the following schema:

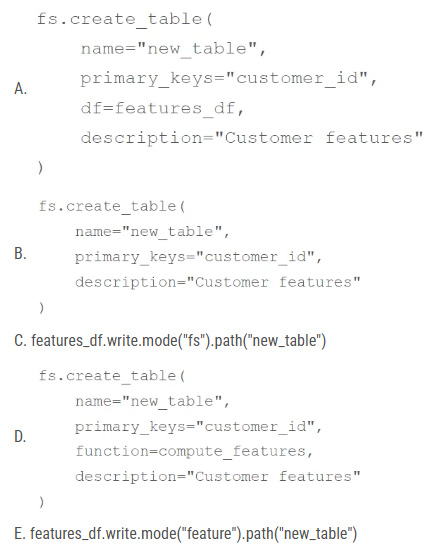

The resulting DataFrame is assigned to the features_df variable. The data scientist wants to create a Feature Store table using features_df. Which of the following code blocks can they use to create and populate the Feature Store table using the Feature Store Client fs?

A. Option A

B. Option B

C. Option C

D. Option D

E. Option E

-

Question 49:

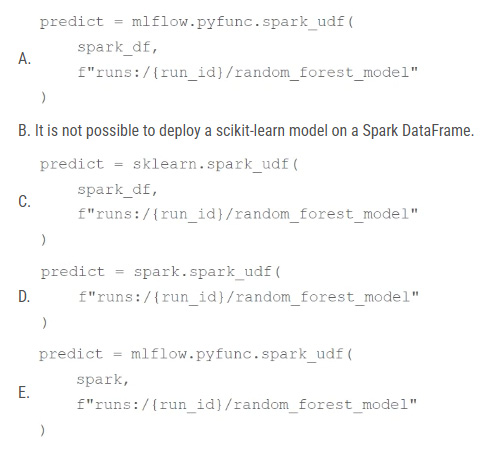

A machine learning engineer has developed a random forest model using scikit-learn, logged the model using MLflow as random_forest_model, and stored its run ID in the run_id Python variable. They now want to deploy that model by performing batch inference on a Spark DataFrame spark_df.

Which of the following code blocks can they use to create a function called predict that they can use to complete the task?

A. Option A

B. Option B

C. Option C

D. Option D

E. Option E

-

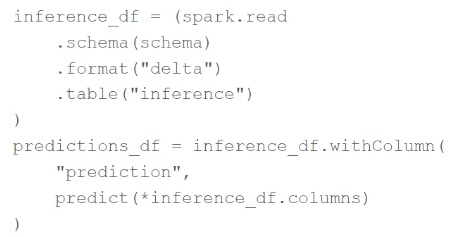

Question 50:

A machine learning engineer is using the following code block as part of a batch deployment pipeline:

Which of the following changes needs to be made so this code block will work when the inference table is a stream source?

A. Replace "inference" with the path to the location of the Delta table

B. Replace schema(schema) with option("maxFilesPerTrigger", 1)

C. Replace spark.read with spark.readStream

D. Replace format("delta") with format("stream")

E. Replace predict with a stream-friendly prediction function

