Exam Details

Exam Code: DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER

Exam Name: Databricks Certified Data Engineer Professional

Certification: Databricks Certifications

Vendor: Databricks

Total Questions: 120 Q&As

Last Updated: Jul 02, 2025

Databricks Databricks Certifications DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER Questions & Answers

-

Question 71:

A DLT pipeline includes the following streaming tables:

raw_iot ingests raw device measurement data from a heart rate tracking device.

bpm_stats incrementally computes user statistics based on BPM measurements from raw_iot.

How can the data engineer configure this pipeline to be able to retain manually deleted or updated records in the raw_iot table while recomputing the downstream table when a pipeline update is run?

A. Set the skipChangeCommits flag to true on bpm_stats

B. Set the skipChangeCommits flag to true on raw_iot

C. Set the pipelines.reset.allowed property to false on bpm_stats

D. Set the pipelines.reset.allowed property to false on raw_iot

-

Question 72:

Assuming that the Databricks CLI has been installed and configured correctly, which Databricks CLI command can be used to upload a custom Python Wheel to object storage mounted with the DBFS for use with a production job?

A. configure

B. fs

C. jobs

D. libraries

E. workspace
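For context, the Databricks CLI groups file operations against DBFS under `fs`, which includes a `cp` subcommand. The sketch below only assembles such a command line so the shape of the call is visible; the wheel name, the `dbfs:/mnt/libraries` target, and the helper `build_wheel_upload_command` are hypothetical and not taken from the question.

```python
import shlex


def build_wheel_upload_command(local_wheel: str, dbfs_dir: str) -> list[str]:
    """Return the argument list for copying a local wheel into a DBFS directory."""
    if not local_wheel.endswith(".whl"):
        raise ValueError("expected a path to a .whl file")
    # Keep only the file name so the wheel lands directly under dbfs_dir.
    file_name = local_wheel.replace("\\", "/").rsplit("/", 1)[-1]
    target = f"{dbfs_dir.rstrip('/')}/{file_name}"
    return ["databricks", "fs", "cp", local_wheel, target]


command = build_wheel_upload_command(
    "dist/my_package-0.1.0-py3-none-any.whl",  # hypothetical wheel
    "dbfs:/mnt/libraries",                      # hypothetical mounted location
)
# Print the command instead of running it; executing it needs a configured CLI.
print(shlex.join(command))
```

Passing the returned list to `subprocess.run(command, check=True)` would perform the upload on a machine where the CLI is installed and authenticated.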

-

Question 73:

The marketing team is looking to share data in an aggregate table with the sales organization, but the field names used by the teams do not match, and a number of marketing-specific fields have not been approved for the sales org.

Which of the following solutions addresses the situation while emphasizing simplicity?

A. Create a view on the marketing table selecting only those fields approved for the sales team; alias the names of any fields that should be standardized to the sales naming conventions.

B. Use a CTAS statement to create a derivative table from the marketing table; configure a production job to propagate changes.

C. Add a parallel table write to the current production pipeline, updating a new sales table that varies as required from the marketing table.

D. Create a new table with the required schema and use Delta Lake's DEEP CLONE functionality to sync up changes committed to one table to the corresponding table.
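To make the view-based option concrete, here is a minimal sketch of a view that exposes a subset of columns under different names. It uses Python's built-in SQLite as a stand-in for a Databricks SQL environment, and every table and column name (`marketing_agg`, `sales_agg`, `cust_id`, `campaign_spend`, `total_rev`) is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical marketing aggregate table; campaign_spend stands in for a
# marketing-specific field that has not been approved for sharing.
conn.execute(
    "CREATE TABLE marketing_agg (cust_id INTEGER, campaign_spend REAL, total_rev REAL)"
)
conn.execute("INSERT INTO marketing_agg VALUES (1, 250.0, 1200.0)")

# The view selects only the approved fields and aliases them to the
# (hypothetical) sales naming conventions. No data is copied.
conn.execute(
    """
    CREATE VIEW sales_agg AS
    SELECT cust_id AS customer_id, total_rev AS revenue
    FROM marketing_agg
    """
)

cursor = conn.execute("SELECT * FROM sales_agg")
columns = [col[0] for col in cursor.description]
rows = cursor.fetchall()
print(columns, rows)

# New rows in the base table are visible through the view without any sync job.
conn.execute("INSERT INTO marketing_agg VALUES (2, 90.0, 300.0)")
updated_rows = conn.execute(
    "SELECT * FROM sales_agg ORDER BY customer_id"
).fetchall()
print(updated_rows)
```

Because a view is evaluated against the base table at query time, there is no second copy of the data to keep in step, which is the point of contrast with the table-copying options.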

-

Question 74:

The DevOps team has configured a production workload as a collection of notebooks scheduled to run daily using the Jobs UI. A new data engineering hire is onboarding to the team and has requested access to one of these notebooks to review the production logic.

What are the maximum notebook permissions that can be granted to the user without allowing accidental changes to production code or data?

A. Can manage

B. Can edit

C. Can run

D. Can read

-

Question 75:

The data architect has mandated that all tables in the Lakehouse should be configured as external (also known as "unmanaged") Delta Lake tables.

Which approach will ensure that this requirement is met?

A. When a database is being created, make sure that the LOCATION keyword is used.

B. When configuring an external data warehouse for all table storage, leverage Databricks for all ELT.

C. When data is saved to a table, make sure that a full file path is specified alongside the Delta format.

D. When tables are created, make sure that the EXTERNAL keyword is used in the CREATE TABLE statement.

E. When the workspace is being configured, make sure that external cloud object storage has been mounted.

-

Question 76:

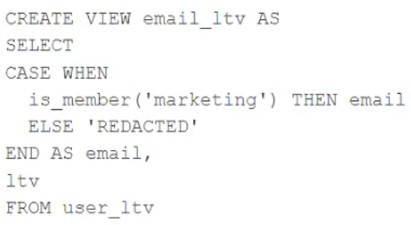

A table named user_ltv is being used to create a view that will be used by data analysts on various teams. Users in the workspace are configured into groups, which are used for setting up data access using ACLs.

The user_ltv table has the following schema: email STRING, age INT, ltv INT

The following view definition is executed:

An analyst who is not a member of the marketing group executes the following query:

SELECT * FROM email_ltv

Which statement describes the results returned by this query?

A. Three columns will be returned, but one column will be named "redacted" and contain only null values.

B. Only the email and ltv columns will be returned; the email column will contain all null values.

C. The email and ltv columns will be returned with the values in user_ltv.

D. The email, age, and ltv columns will be returned with the values in user_ltv.

E. Only the email and ltv columns will be returned; the email column will contain the string "REDACTED" in each row.

-

Question 77:

Which statement regarding Spark configuration on the Databricks platform is true?

A. Spark configuration properties set for an interactive cluster with the Clusters UI will impact all notebooks attached to that cluster.

B. When the same Spark configuration property is set for an interactive to the same interactive cluster.

C. Spark configuration set within a notebook will affect all SparkSessions attached to the same interactive cluster.

D. The Databricks REST API can be used to modify the Spark configuration properties for an interactive cluster without interrupting jobs.

-

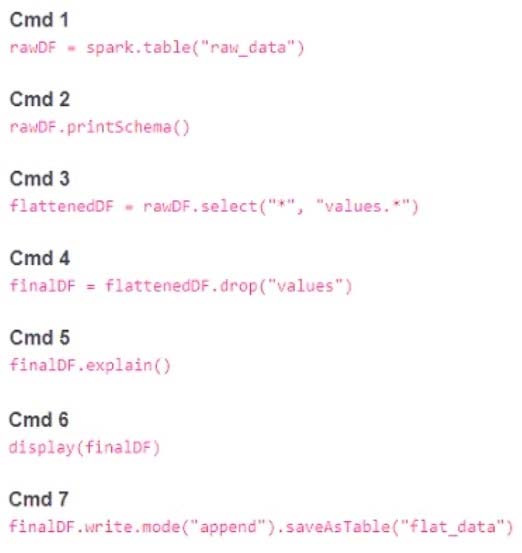

Question 78:

A member of the data engineering team has submitted a short notebook that they wish to schedule as part of a larger data pipeline. Assume that the commands provided below produce the logically correct results when run as presented.

Which command should be removed from the notebook before scheduling it as a job?

A. Cmd 2

B. Cmd 3

C. Cmd 4

D. Cmd 5

E. Cmd 6

-

Question 79:

What is the first line of a Databricks Python notebook when viewed in a text editor?

A. %python

B. # Databricks notebook source

C. --Databricks notebook source

D. //Databricks notebook source
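As background, a Python notebook exported from Databricks as a source file is an ordinary `.py` file in which Databricks adds marker comments: a header comment on the first line and `# COMMAND ----------` between cells. The sketch below checks for that header; the helper name and the sample text are illustrative only.

```python
PYTHON_NOTEBOOK_HEADER = "# Databricks notebook source"


def looks_like_databricks_python_notebook(source_text: str) -> bool:
    """Return True if the first line is the Databricks notebook header comment."""
    lines = source_text.splitlines()
    return bool(lines) and lines[0].strip() == PYTHON_NOTEBOOK_HEADER


# Hypothetical export of a two-cell notebook.
exported = (
    "# Databricks notebook source\n"
    "print('hello')\n"
    "\n"
    "# COMMAND ----------\n"
    "\n"
    "print('second cell')\n"
)

print(looks_like_databricks_python_notebook(exported))
print(looks_like_databricks_python_notebook("%python\nprint(1)\n"))
```

A check like this can be useful in a repository to tell notebook source files apart from plain Python modules.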

-

Question 80:

An upstream system is emitting change data capture (CDC) logs that are being written to a cloud object storage directory. Each record in the log indicates the change type (insert, update, or delete) and the values for each field after the change. The source table has a primary key identified by the field pk_id.

For auditing purposes, the data governance team wishes to maintain a full record of all values that have ever been valid in the source system. For analytical purposes, only the most recent value for each record needs to be recorded. The Databricks job to ingest these records occurs once per hour, but each individual record may have changed multiple times over the course of an hour.

Which solution meets these requirements?

A. Create a separate history table for each pk_id; resolve the current state of the table by running a union all, filtering the history tables for the most recent state.

B. Use merge into to insert, update, or delete the most recent entry for each pk_id into a bronze table, then propagate all changes throughout the system.

C. Iterate through an ordered set of changes to the table, applying each in turn; rely on Delta Lake's versioning ability to create an audit log.

D. Use Delta Lake's change data feed to automatically process CDC data from an external system, propagating all changes to all dependent tables in the Lakehouse.

E. Ingest all log information into a bronze table; use merge into to insert, update, or delete the most recent entry for each pk_id into a silver table to recreate the current table state.
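The two requirements in this scenario (a full history and a current-state table) can be illustrated with a small pure-Python sketch of an append-only bronze layer feeding a merged silver layer. It is not Delta Lake code: plain lists and dicts stand in for tables, and the `change_time` ordering field, the `value` field, and the `ingest_batch` helper are assumptions, since the question does not say how changes are ordered. Batches are also assumed to arrive in order.

```python
from typing import Any

bronze: list[dict[str, Any]] = []        # append-only: every change ever received
silver: dict[int, dict[str, Any]] = {}   # current state, keyed by pk_id


def ingest_batch(batch: list[dict[str, Any]]) -> None:
    """Append the whole batch to bronze, then merge the latest change per key into silver."""
    # Bronze keeps all records, which preserves the audit history.
    bronze.extend(batch)

    # A key may change several times within one batch; keep only its latest change.
    latest: dict[int, dict[str, Any]] = {}
    for record in batch:
        key = record["pk_id"]
        if key not in latest or record["change_time"] > latest[key]["change_time"]:
            latest[key] = record

    # Apply the deduplicated changes, mirroring a merge's matched and
    # not-matched branches: deletes remove the row, everything else upserts.
    for key, record in latest.items():
        if record["change_type"] == "delete":
            silver.pop(key, None)
        else:
            silver[key] = {"pk_id": key, "value": record["value"]}


hourly_batch = [
    {"pk_id": 1, "change_type": "insert", "value": "a", "change_time": 1},
    {"pk_id": 1, "change_type": "update", "value": "b", "change_time": 2},
    {"pk_id": 2, "change_type": "insert", "value": "x", "change_time": 1},
    {"pk_id": 2, "change_type": "delete", "value": None, "change_time": 3},
]
ingest_batch(hourly_batch)
print(len(bronze), silver)
```

Deduplicating to one change per key before merging matters because a merge generally cannot resolve several source rows that match the same target row.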
