Exam Details

Exam Code: PROFESSIONAL-CLOUD-DEVELOPER

Exam Name: Professional Cloud Developer

Certification: Google Certifications

Vendor: Google

Total Questions: 254 Q&As

Last Updated: Jun 28, 2025

Google Google Certifications PROFESSIONAL-CLOUD-DEVELOPER Questions & Answers

-

Question 31:

You are designing a resource-sharing policy for applications used by different teams in a Google Kubernetes Engine cluster. You need to ensure that all applications can access the resources needed to run. What should you do? (Choose two.)

A. Specify the resource limits and requests in the object specifications.

B. Create a namespace for each team, and attach resource quotas to each namespace.

C. Create a LimitRange to specify the default compute resource requirements for each namespace.

D. Create a Kubernetes service account (KSA) for each application, and assign each KSA to the namespace.

E. Use the Anthos Policy Controller to enforce label annotations on all namespaces. Use taints and tolerations to allow resource sharing for namespaces.
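Here is a minimal sketch of the per-team objects described in options B and C. It writes them as Python dicts that serialize to JSON, and kubectl apply -f accepts JSON manifests as well as YAML. The team names and all CPU/memory figures are hypothetical placeholders, not recommendations. The ResourceQuota caps what one team's namespace can claim in total. The LimitRange supplies default requests and limits for containers that omit them, because a namespace with a CPU/memory quota rejects Pods that do not declare those values.

```python
import json


def team_manifests(team: str) -> list[dict]:
    """Build a Namespace, ResourceQuota, and LimitRange for one team (hypothetical values)."""
    namespace = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": team}}
    # ResourceQuota: upper bound on the summed requests/limits of all Pods in the namespace.
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{team}-quota", "namespace": team},
        "spec": {
            "hard": {
                "requests.cpu": "4",
                "requests.memory": "8Gi",
                "limits.cpu": "8",
                "limits.memory": "16Gi",
            }
        },
    }
    # LimitRange: default request/limit applied to containers that do not set their own.
    limit_range = {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": f"{team}-defaults", "namespace": team},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    "defaultRequest": {"cpu": "250m", "memory": "256Mi"},
                    "default": {"cpu": "500m", "memory": "512Mi"},
                }
            ]
        },
    }
    return [namespace, quota, limit_range]


if __name__ == "__main__":
    # A v1 "List" wraps several objects so they can be applied from one file.
    items = [m for team in ("team-a", "team-b") for m in team_manifests(team)]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```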

-

Question 32:

You are parsing a log file that contains three columns: a timestamp, an account number (a string), and a transaction amount (a number). You want to calculate the sum of all transaction amounts for each unique account number efficiently.

Which data structure should you use?

A. A linked list

B. A hash table

C. A two-dimensional array

D. A comma-delimited string
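Here is a short sketch of per-key aggregation with a hash table, using Python's dict (via defaultdict). The sketch assumes comma-separated rows in the order timestamp, account number, amount, with no header and no blank lines. It also uses Decimal instead of float to avoid rounding drift in monetary sums. Each row costs one average O(1) lookup and update, so the whole file is processed in linear time.

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal


def sum_by_account(log_text: str) -> dict[str, Decimal]:
    """Sum transaction amounts per account number using a hash table (dict).

    Assumes comma-separated rows: timestamp, account number, amount (no header).
    """
    totals = defaultdict(Decimal)  # a missing key starts at Decimal('0')
    for _timestamp, account, amount in csv.reader(io.StringIO(log_text)):
        totals[account] += Decimal(amount)  # average O(1) lookup and update per row
    return dict(totals)


sample = (
    "2025-06-01T10:00:00,ACC-1,10.50\n"
    "2025-06-01T10:01:00,ACC-2,3.00\n"
    "2025-06-01T10:02:00,ACC-1,4.50\n"
)
print(sum_by_account(sample))  # {'ACC-1': Decimal('15.00'), 'ACC-2': Decimal('3.00')}
```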

-

Question 33:

You recently developed a new service on Cloud Run. The new service authenticates using a custom service and then writes transactional information to a Cloud Spanner database. You need to verify that your application can support up to 5,000 read and 1,000 write transactions per second while identifying any bottlenecks that occur. Your test infrastructure must be able to autoscale. What should you do?

A. Build a test harness to generate requests and deploy it to Cloud Run. Analyze the VPC Flow Logs using Cloud Logging.

B. Create a Google Kubernetes Engine cluster running the Locust or JMeter images to dynamically generate load tests. Analyze the results using Cloud Trace.

C. Create a Cloud Task to generate a test load. Use Cloud Scheduler to run 60,000 Cloud Task transactions per minute for 10 minutes. Analyze the results using Cloud Monitoring.

D. Create a Compute Engine instance that uses a LAMP stack image from the Marketplace, and use Apache Bench to generate load tests against the service. Analyze the results using Cloud Trace.

-

Question 34:

You are writing a single-page web application with a user interface that communicates with a third-party API for content using XMLHttpRequest. The API data displayed in the UI is less critical than other data displayed on the same web page, so it is acceptable for some requests not to have the API data displayed in the UI. However, calls made to the API should not delay rendering of other parts of the user interface. You want your application to perform well when the API response is an error or a timeout.

What should you do?

A. Set the asynchronous option for your requests to the API to false and omit the widget displaying the API results when a timeout or error is encountered.

B. Set the asynchronous option for your request to the API to true and omit the widget displaying the API results when a timeout or error is encountered.

C. Catch timeout or error exceptions from the API call and keep trying with exponential backoff until the API response is successful.

D. Catch timeout or error exceptions from the API call and display the error response in the UI widget.
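XMLHttpRequest is browser JavaScript. To keep one language across these examples, here is a Python asyncio analog of the pattern in option B, offered only as an illustration. The API call runs in the background, and the critical parts of the page are rendered without waiting for it. On a timeout or error, the widget is omitted instead of retried or shown as an error. fetch_third_party, render_page, and the page parts are hypothetical stand-ins, and the delays only simulate latency.

```python
import asyncio
from typing import Optional


async def fetch_third_party(delay: float, fail: bool = False) -> str:
    """Hypothetical stand-in for the third-party API call."""
    await asyncio.sleep(delay)  # simulated network latency
    if fail:
        raise ConnectionError("simulated API error")
    return "third-party content"


async def load_widget(delay: float, timeout: float, fail: bool = False) -> Optional[str]:
    """Return the widget content, or None so the caller can omit the widget."""
    try:
        return await asyncio.wait_for(fetch_third_party(delay, fail), timeout)
    except (asyncio.TimeoutError, OSError):  # ConnectionError is a subclass of OSError
        return None  # timeout or error: no retry, just omit the widget


async def render_page(api_delay: float, timeout: float, fail: bool = False) -> list[str]:
    # Start the API call in the background (the "asynchronous = true" case).
    widget_task = asyncio.create_task(load_widget(api_delay, timeout, fail))
    # Critical parts are rendered immediately, without waiting for the API.
    rendered = ["header", "main content"]
    widget = await widget_task
    if widget is not None:
        rendered.append(widget)
    return rendered


print(asyncio.run(render_page(api_delay=0.01, timeout=1.0)))  # ['header', 'main content', 'third-party content']
print(asyncio.run(render_page(api_delay=1.0, timeout=0.05)))  # ['header', 'main content']
```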

-

Question 35:

Your website is deployed on Compute Engine. Your marketing team wants to test conversion rates between 3 different website designs.

Which approach should you use?

A. Deploy the website on App Engine and use traffic splitting.

B. Deploy the website on App Engine as three separate services.

C. Deploy the website on Cloud Functions and use traffic splitting.

D. Deploy the website on Cloud Functions as three separate functions.

-

Question 36:

You need to containerize a web application that will be hosted on Google Cloud behind a global load balancer with SSL certificates. You don't have the time to develop authentication at the application level, and you want to offload SSL encryption and management from your application. You want to configure the architecture using managed services where possible. What should you do?

A. Host the application on Compute Engine, and configure Cloud Endpoints for your application.

B. Host the application on Google Kubernetes Engine and use Identity-Aware Proxy (IAP) with Cloud Load Balancing and Google-managed certificates.

C. Host the application on Google Kubernetes Engine, and deploy an NGINX Ingress Controller to handle authentication.

D. Host the application on Google Kubernetes Engine, and deploy cert-manager to manage SSL certificates.

-

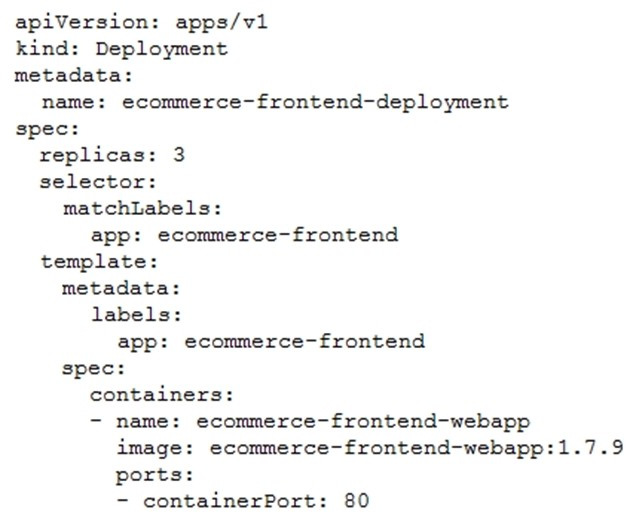

Question 37:

Your application is deployed in a Google Kubernetes Engine (GKE) cluster. When a new version of your application is released, your CI/CD tool updates the spec.template.spec.containers[0].image value to reference the Docker image of your new application version. When the Deployment object applies the change, you want to deploy at least 1 replica of the new version and maintain the previous replicas until the new replica is healthy.

Which change should you make to the GKE Deployment object shown in image 1?

A. Set the Deployment strategy to RollingUpdate with maxSurge set to 0, maxUnavailable set to 1.

B. Set the Deployment strategy to RollingUpdate with maxSurge set to 1, maxUnavailable set to 0.

C. Set the Deployment strategy to Recreate with maxSurge set to 0, maxUnavailable set to 1.

D. Set the Deployment strategy to Recreate with maxSurge set to 1, maxUnavailable set to 0.
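Here is a sketch of what the strategy fields in option B look like, written as a Python dict that serializes to a JSON manifest, with a small helper that works out the Pod-count bounds for integer settings. The Deployment name, label, replica count, and image are hypothetical. With maxSurge 1 the controller may run one Pod above the desired count, and with maxUnavailable 0 it must keep every existing replica available while the new one starts. The Recreate strategy has no maxSurge or maxUnavailable settings; it terminates the existing Pods before creating new ones.

```python
import json

# Hypothetical name, labels, replica count, and image; only "strategy" reflects option B.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "my-app"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "my-app"}},
        "strategy": {
            "type": "RollingUpdate",
            "rollingUpdate": {
                "maxSurge": 1,        # at most 1 Pod above "replicas" during the rollout
                "maxUnavailable": 0,  # never fewer than "replicas" available Pods
            },
        },
        "template": {
            "metadata": {"labels": {"app": "my-app"}},
            "spec": {"containers": [{"name": "my-app", "image": "example.com/my-app:v2"}]},
        },
    },
}


def rollout_bounds(replicas: int, max_surge: int, max_unavailable: int) -> tuple[int, int]:
    """Return (maximum total Pods, minimum available Pods) for integer surge/unavailable values."""
    return replicas + max_surge, replicas - max_unavailable


ru = deployment["spec"]["strategy"]["rollingUpdate"]
print(rollout_bounds(deployment["spec"]["replicas"], ru["maxSurge"], ru["maxUnavailable"]))  # (4, 3)
print(json.dumps(deployment, indent=2))
```

For comparison, the settings in option A (maxSurge 0, maxUnavailable 1) give bounds of (3, 2). That means an old replica is removed before its replacement is healthy.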

-

Question 38:

You have an application running in a production Google Kubernetes Engine (GKE) cluster. You use Cloud Deploy to automatically deploy your application to your production GKE cluster. As part of your development process, you are planning to make frequent changes to the application's source code and need to select the tools to test the changes before pushing them to your remote source code repository. Your toolset must meet the following requirements:

• Test frequent local changes automatically.

• Local deployment emulates production deployment.

Which tools should you use to test building and running a container on your laptop using minimal resources?

A. Terraform and kubeadm

B. Docker Compose and dockerd

C. Minikube and Skaffold

D. kaniko and Tekton

-

Question 39:

Your data is stored in Cloud Storage buckets. Fellow developers have reported that data downloaded from Cloud Storage is resulting in slow API performance. You want to research the issue to provide details to the GCP support team. Which command should you run?

A. gsutil test

-

Question 40:

One of your deployed applications in Google Kubernetes Engine (GKE) is having intermittent performance issues. Your team uses a third-party logging solution. You want to install this solution on each node in your GKE cluster so you can view the logs. What should you do?

A. Deploy the third-party solution as a DaemonSet

B. Modify your container image to include the monitoring software

C. Use SSH to connect to the GKE node, and install the software manually

D. Deploy the third-party solution using Terraform and deploy the logging Pod as a Kubernetes Deployment
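Here is a sketch of the DaemonSet described in option A, written as a Python dict that serializes to a JSON manifest. A DaemonSet schedules one copy of its Pod on every eligible node, which is why it suits per-node log agents. The name, the logging namespace (which would have to exist), the image, and the /var/log hostPath mount are hypothetical choices, not the vendor's actual configuration.

```python
import json


def log_agent_daemonset(image: str, namespace: str = "logging") -> dict:
    """Build a DaemonSet so one log-agent Pod runs on each eligible node (hypothetical names)."""
    labels = {"app": "log-agent"}
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": "log-agent", "namespace": namespace},
        "spec": {
            "selector": {"matchLabels": labels},  # must match the Pod template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "log-agent",
                            "image": image,
                            # Read node-level log files from the host filesystem.
                            "volumeMounts": [
                                {"name": "varlog", "mountPath": "/var/log", "readOnly": True}
                            ],
                        }
                    ],
                    "volumes": [{"name": "varlog", "hostPath": {"path": "/var/log"}}],
                },
            },
        },
    }


print(json.dumps(log_agent_daemonset("example.com/third-party-log-agent:1.0"), indent=2))
```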
