Exam Details

Exam Code: DBS-C01

Exam Name: AWS Certified Database - Specialty (DBS-C01)

Certification: Amazon Certifications

Vendor: Amazon

Total Questions: 321 Q&As

Last Updated: Jul 02, 2025

Amazon Certifications DBS-C01 Questions & Answers

-

Question 131:

A company is running critical applications on AWS. Most of the application deployments use Amazon Aurora MySQL for the database stack. The company uses AWS CloudFormation to deploy the DB instances.

The company's application team recently implemented a CI/CD pipeline. A database engineer needs to integrate the database deployment CloudFormation stack with the newly built CI/CD platform. Updates to the CloudFormation stack must not update existing production database resources.

Which CloudFormation stack policy action should the database engineer implement to meet these requirements?

A. Use a Deny statement for the Update:Modify action on the production database resources.

B. Use a Deny statement for the Update:* action on the production database resources.

C. Use a Deny statement for the Update:Delete action on the production database resources.

D. Use a Deny statement for the Update:Replace action on the production database resources.

-

Question 132:

A company is using AWS CloudFormation to provision and manage infrastructure resources, including a production database. During a recent CloudFormation stack update, a database specialist observed that changes were made to a database resource that is named ProductionDatabase. The company wants to prevent changes to only ProductionDatabase during future stack updates.

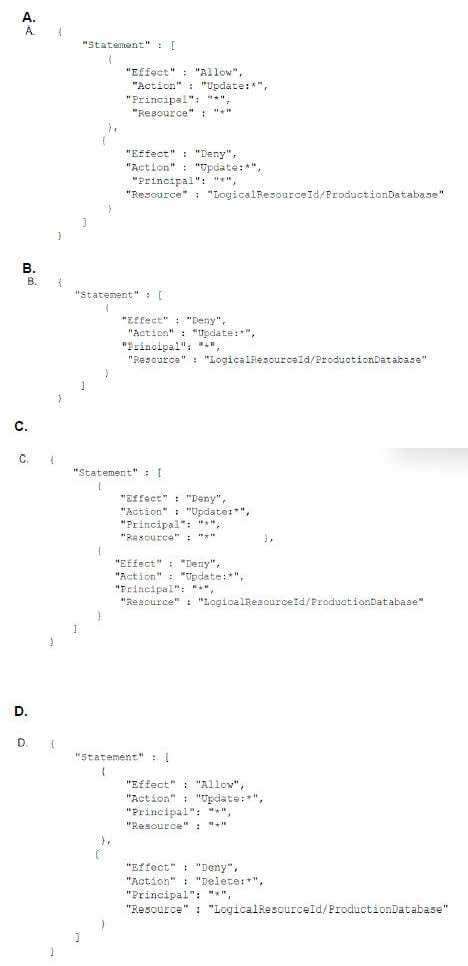

Which stack policy will meet this requirement?

A. Option A

B. Option B

C. Option C

D. Option D
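
For reference, the general shape of a CloudFormation stack policy can be sketched as follows. This is a minimal illustration that follows the documented pattern for protecting a single resource (an Allow on everything plus a Deny scoped to one logical ID); the helper name `build_stack_policy` is hypothetical, and because the answer options exist only in the image, this is not a statement about which option is correct.

```python
import json


def build_stack_policy(protected_logical_id: str) -> dict:
    """Build a stack policy that blocks updates to one logical resource.

    Hypothetical helper for illustration; the statement layout follows
    the stack policy JSON format (Effect, Action, Principal, Resource).
    """
    return {
        "Statement": [
            {
                # Stack policies deny by default once attached, so allow
                # updates on every resource first.
                "Effect": "Allow",
                "Action": "Update:*",
                "Principal": "*",
                "Resource": "*",
            },
            {
                # An explicit Deny overrides the Allow for this resource only.
                "Effect": "Deny",
                "Action": "Update:*",
                "Principal": "*",
                "Resource": f"LogicalResourceId/{protected_logical_id}",
            },
        ]
    }


policy = build_stack_policy("ProductionDatabase")
print(json.dumps(policy, indent=2))
```

The resulting JSON could be attached to a stack as its stack policy; narrowing `Action` to `Update:Modify`, `Update:Replace`, or `Update:Delete` would restrict only that kind of update.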

-

Question 133:

A company is running an Amazon RDS for PostgreSQL DB instance and wants to migrate it to an Amazon Aurora PostgreSQL DB cluster. The current database is 1 TB in size. The migration needs to have minimal downtime. What is the FASTEST way to accomplish this?

A. Create an Aurora PostgreSQL DB cluster. Set up replication from the source RDS for PostgreSQL DB instance using AWS DMS to the target DB cluster.

B. Use the pg_dump and pg_restore utilities to extract and restore the RDS for PostgreSQL DB instance to the Aurora PostgreSQL DB cluster.

C. Create a database snapshot of the RDS for PostgreSQL DB instance and use this snapshot to create the Aurora PostgreSQL DB cluster.

D. Migrate data from the RDS for PostgreSQL DB instance to an Aurora PostgreSQL DB cluster using an Aurora Replica. Promote the replica during the cutover.

-

Question 134:

A bank intends to utilize Amazon RDS to host a MySQL database instance. The database should be able to handle high-volume read requests with extremely few repeated queries.

Which solution satisfies these criteria?

A. Create an Amazon ElastiCache cluster. Use a write-through strategy to populate the cache.

B. Create an Amazon ElastiCache cluster. Use a lazy loading strategy to populate the cache.

C. Change the DB instance to Multi-AZ with a standby instance in another AWS Region.

D. Create a read replica of the DB instance. Use the read replica to distribute the read traffic.
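
The two caching strategies named in the options can be sketched with plain dictionaries. This is a simplified model, not ElastiCache client code: the `Database`, `LazyLoadingCache`, and `WriteThroughCache` classes and the `acct-*` keys are hypothetical stand-ins. It shows what happens to cache hits when queries are rarely repeated.

```python
class Database:
    """Stand-in for the MySQL DB instance; counts reads that reach it."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self.reads = 0

    def read(self, key):
        self.reads += 1
        return self.rows.get(key)

    def write(self, key, value):
        self.rows[key] = value


class LazyLoadingCache:
    """Populate the cache only on a miss (cache-aside)."""

    def __init__(self, db):
        self.db = db
        self.store = {}
        self.hits = 0

    def get(self, key):
        if key in self.store:
            self.hits += 1
            return self.store[key]
        value = self.db.read(key)  # miss: go to the database
        self.store[key] = value    # then remember the result
        return value


class WriteThroughCache:
    """Populate the cache on every write, before any read happens."""

    def __init__(self, db):
        self.db = db
        self.store = {}

    def put(self, key, value):
        self.db.write(key, value)
        self.store[key] = value

    def get(self, key):
        if key in self.store:
            return self.store[key]
        return self.db.read(key)


db = Database({f"acct-{i}": i * 100 for i in range(5)})
lazy = LazyLoadingCache(db)

# Five distinct keys, each requested once: every request misses the cache.
for i in range(5):
    lazy.get(f"acct-{i}")
unique_reads = db.reads

# Only a repeated query benefits from the cache.
lazy.get("acct-0")
print(unique_reads, lazy.hits, db.reads)
```

In this model a cache only reduces database reads for keys that are requested again, which is the property the question's "extremely few repeated queries" wording turns on.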

-

Question 135:

A business needs a data warehouse system that stores data consistently and in a highly organized fashion. The organization demands rapid response times for end-user inquiries involving current-year data, and users must have access to the whole 15-year dataset when necessary. Additionally, this solution must be able to manage a variable volume of incoming inquiries. Costs associated with storing the 100 TB of data must be kept to a minimum.

Which solution satisfies these criteria?

A. Leverage an Amazon Redshift data warehouse solution using a dense storage instance type while keeping all the data on local Amazon Redshift storage. Provision enough instances to support high demand.

B. Leverage an Amazon Redshift data warehouse solution using a dense storage instance to store the most recent data. Keep historical data on Amazon S3 and access it using the Amazon Redshift Spectrum layer. Provision enough instances to support high demand.

C. Leverage an Amazon Redshift data warehouse solution using a dense storage instance to store the most recent data. Keep historical data on Amazon S3 and access it using the Amazon Redshift Spectrum layer. Enable Amazon Redshift Concurrency Scaling.

D. Leverage an Amazon Redshift data warehouse solution using a dense storage instance to store the most recent data. Keep historical data on Amazon S3 and access it using the Amazon Redshift Spectrum layer. Leverage Amazon Redshift elastic resize.

-

Question 136:

A database specialist manages a critical Amazon RDS for MySQL DB instance for a company. The data stored daily could vary from 0.01% to 10% of the current database size. The database specialist needs to ensure that the DB instance storage grows as needed.

What is the MOST operationally efficient and cost-effective solution?

A. Configure RDS Storage Auto Scaling.

B. Configure RDS instance Auto Scaling.

C. Modify the DB instance allocated storage to meet the forecasted requirements.

D. Monitor the Amazon CloudWatch FreeStorageSpace metric daily and add storage as required.
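
To see how wide the stated growth range is, the arithmetic can be worked through in a few lines. This is a rough sketch under assumptions not in the question: a hypothetical starting size of 1,000 GiB, a 30-day horizon, and growth that compounds daily at a constant rate (the question only says the daily amount is a percentage of the current size).

```python
def projected_size_gib(current_gib: float, daily_growth: float, days: int) -> float:
    """Size after `days` of growth at `daily_growth` (a fraction of the
    current size), compounding once per day."""
    return current_gib * (1 + daily_growth) ** days


START_GIB = 1000.0  # hypothetical starting size
DAYS = 30           # hypothetical planning horizon

low = projected_size_gib(START_GIB, 0.0001, DAYS)  # 0.01% per day
high = projected_size_gib(START_GIB, 0.10, DAYS)   # 10% per day

print(f"low estimate:  {low:,.1f} GiB")
print(f"high estimate: {high:,.1f} GiB")
print(f"ratio high/low: {high / low:.1f}x")
```

Under these assumptions the two ends of the range differ by more than an order of magnitude after a month, which is why a fixed up-front allocation is hard to size.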

-

Question 137:

A company maintains several databases using Amazon RDS for MySQL and PostgreSQL. Each RDS database generates log files with retention periods set to their default values. The company has now mandated that database logs be maintained for up to 90 days in a centralized repository to facilitate real-time and after-the-fact analyses.

What should a Database Specialist do to meet these requirements with minimal effort?

A. Create an AWS Lambda function to pull logs from the RDS databases and consolidate the log files in an Amazon S3 bucket. Set a lifecycle policy to expire the objects after 90 days.

B. Modify the RDS databases to publish logs to Amazon CloudWatch Logs. Change the log retention policy for each log group to expire the events after 90 days.

C. Write a stored procedure in each RDS database to download the logs and consolidate the log files in an Amazon S3 bucket. Set a lifecycle policy to expire the objects after 90 days.

D. Create an AWS Lambda function to download the logs from the RDS databases and publish the logs to Amazon CloudWatch Logs. Change the log retention policy for the log group to expire the events after 90 days.
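
The 90-day retention setting mentioned in the options can be sketched as follows. This is a minimal illustration that only builds request parameters and makes no AWS calls. The instance identifiers are hypothetical, and the `/aws/rds/instance/<id>/<log type>` log group naming is an assumption about how RDS names groups for published logs. Each resulting dictionary is shaped for the CloudWatch Logs `put_retention_policy` call in boto3.

```python
RETENTION_DAYS = 90


def rds_log_group_name(db_instance_id: str, log_type: str) -> str:
    """Assumed naming scheme for log groups created by RDS log exports."""
    return f"/aws/rds/instance/{db_instance_id}/{log_type}"


def retention_requests(instances: dict, days: int = RETENTION_DAYS) -> list:
    """Build one retention request per (instance, log type) pair.

    `instances` maps a DB instance identifier to the log types it publishes.
    """
    return [
        {"logGroupName": rds_log_group_name(db_id, log_type), "retentionInDays": days}
        for db_id, log_types in instances.items()
        for log_type in log_types
    ]


# Hypothetical fleet: one MySQL and one PostgreSQL instance.
fleet = {
    "orders-mysql": ["error", "slowquery"],
    "billing-postgres": ["postgresql"],
}

requests = retention_requests(fleet)
for req in requests:
    print(req)
    # With boto3 installed and credentials configured, each request could be
    # applied as: boto3.client("logs").put_retention_policy(**req)
```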

-

Question 138:

An application reads and writes data to an Amazon RDS for MySQL DB instance. A new reporting dashboard needs read-only access to the database. When the application and reports are both under heavy load, the database experiences performance degradation. A database specialist needs to improve the database performance.

What should the database specialist do to meet these requirements?

A. Create a read replica of the DB instance. Configure the reports to connect to the replication instance endpoint.

B. Create a read replica of the DB instance. Configure the application and reports to connect to the cluster endpoint.

C. Enable Multi-AZ deployment. Configure the reports to connect to the standby replica.

D. Enable Multi-AZ deployment. Configure the application and reports to connect to the cluster endpoint.

-

Question 139:

A business is operating an on-premises application that is divided into three tiers: web, application, and MySQL database. The database is predominantly accessed during business hours, with occasional bursts of activity throughout the day. As part of the company's shift to AWS, a database expert wants to increase the availability and minimize the cost of the MySQL database tier.

Which MySQL database choice satisfies these criteria?

A. Amazon RDS for MySQL with Multi-AZ

B. Amazon Aurora Serverless MySQL cluster

C. Amazon Aurora MySQL cluster

D. Amazon RDS for MySQL with read replica

-

Question 140:

A pharmaceutical company's drug search API is using an Amazon Neptune DB cluster. A bulk uploader process automatically updates the information in the database a few times each week. A few weeks ago during a bulk upload, a database specialist noticed that the database started to respond frequently with a ThrottlingException error. The problem also occurred with subsequent uploads.

The database specialist must create a solution to prevent ThrottlingException errors for the database. The solution must minimize the downtime of the cluster.

Which solution meets these requirements?

A. Create a read replica that uses a larger instance size than the primary DB instance. Fail over the primary DB instance to the read replica.

B. Add a read replica to each Availability Zone. Use an instance for the read replica that is the same size as the primary DB instance. Keep the traffic between the API and the database within the Availability Zone.

C. Create a read replica that uses a larger instance size than the primary DB instance. Offload the reads from the primary DB instance.

D. Take the latest backup, and restore it in a DB cluster of a larger size. Point the application to the newly created DB cluster.
