Exam Details

Exam Code

:APACHE-HADOOP-DEVELOPERExam Name

:Hadoop 2.0 Certification for Pig and Hive DeveloperCertification

:Hortonworks CertificationsVendor

:HortonworksTotal Questions

:60 Q&AsLast Updated

:Jun 27, 2025

Hortonworks Hortonworks Certifications APACHE-HADOOP-DEVELOPER Questions & Answers

-

Question 51:

You have user profile records in your OLPT database, that you want to join with web logs you have already ingested into the Hadoop file system. How will you obtain these user records?

A. HDFS command

B. Pig LOAD command

C. Sqoop import

D. Hive LOAD DATA command

E. Ingest with Flume agents

F. Ingest with Hadoop Streaming

-

Question 52:

On a cluster running MapReduce v1 (MRv1), a TaskTracker heartbeats into the JobTracker on your cluster, and alerts the JobTracker it has an open map task slot.

What determines how the JobTracker assigns each map task to a TaskTracker?

A. The amount of RAM installed on the TaskTracker node.

B. The amount of free disk space on the TaskTracker node.

C. The number and speed of CPU cores on the TaskTracker node.

D. The average system load on the TaskTracker node over the past fifteen (15) minutes.

E. The location of the InsputSplit to be processed in relation to the location of the node.

-

Question 53:

Which best describes how TextInputFormat processes input files and line breaks?

A. Input file splits may cross line breaks. A line that crosses file splits is read by the RecordReader of the split that contains the beginning of the broken line.

B. Input file splits may cross line breaks. A line that crosses file splits is read by the RecordReaders of both splits containing the broken line.

C. The input file is split exactly at the line breaks, so each RecordReader will read a series of complete lines.

D. Input file splits may cross line breaks. A line that crosses file splits is ignored.

E. Input file splits may cross line breaks. A line that crosses file splits is read by the RecordReader of the split that contains the end of the broken line.

-

Question 54:

Which YARN component is responsible for monitoring the success or failure of a Container?

A. ResourceManager

B. ApplicationMaster

C. NodeManager

D. JobTracker

-

Question 55:

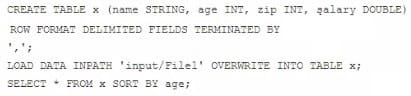

Examine the following Hive statements:

Assuming the statements above execute successfully, which one of the following statements is true?

A. Each reducer generates a file sorted by age

B. The SORT BY command causes only one reducer to be used

C. The output of each reducer is only the age column

D. The output is guaranteed to be a single file with all the data sorted by age

-

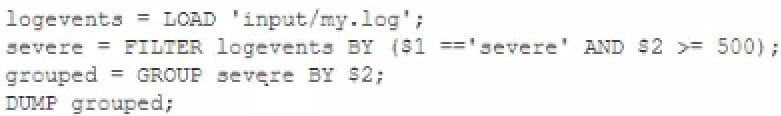

Question 56:

Given the following Pig commands: Which one of the following statements is true?

A. The $1 variable represents the first column of data in 'my.log'

B. The $1 variable represents the second column of data in 'my.log'

C. The severe relation is not valid

D. The grouped relation is not valid

-

Question 57:

Which one of the following Hive commands uses an HCatalog table named x?

A. SELECT * FROM x;

B. SELECT x.-FROM org.apache.hcatalog.hive.HCatLoader('x');

C. SELECT * FROM org.apache.hcatalog.hive.HCatLoader('x');

D. Hive commands cannot reference an HCatalog table

-

Question 58:

In a large MapReduce job with m mappers and n reducers, how many distinct copy operations will there be in the sort/shuffle phase?

A. mXn (i.e., m multiplied by n)

B. n

C. m

D. m+n (i.e., m plus n)

E. mn (i.e., m to the power of n)

-

Question 59:

Which describes how a client reads a file from HDFS?

A. The client queries the NameNode for the block location(s). The NameNode returns the block location(s) to the client. The client reads the data directory off the DataNode(s).

B. The client queries all DataNodes in parallel. The DataNode that contains the requested data responds directly to the client. The client reads the data directly off the DataNode.

C. The client contacts the NameNode for the block location(s). The NameNode then queries the DataNodes for block locations. The DataNodes respond to the NameNode, and the NameNode redirects the client to the DataNode that holds the requested data block(s). The client then reads the data directly off the DataNode.

D. The client contacts the NameNode for the block location(s). The NameNode contacts the DataNode that holds the requested data block. Data is transferred from the DataNode to the NameNode, and then from the NameNode to the client.

-

Question 60:

Which one of the following files is required in every Oozie Workflow application?

A. job.properties

B. Config-default.xml

C. Workflow.xml

D. Oozie.xml

Related Exams:

Tips on How to Prepare for the Exams

Nowadays, the certification exams become more and more important and required by more and more enterprises when applying for a job. But how to prepare for the exam effectively? How to prepare for the exam in a short time with less efforts? How to get a ideal result and how to find the most reliable resources? Here on Vcedump.com, you will find all the answers. Vcedump.com provide not only Hortonworks exam questions, answers and explanations but also complete assistance on your exam preparation and certification application. If you are confused on your APACHE-HADOOP-DEVELOPER exam preparations and Hortonworks certification application, do not hesitate to visit our Vcedump.com to find your solutions here.